Kinetix has created state-of-the-art machine learning models to make animation creation as easy as it should be.

Kinetix AI enables single-camera motion capture. Our deep learning algorithms can extract human motion from any single-camera video.

Kinetix AI will analyze the video sent by the player, identify the person appearing in it, and read their movement in 3D (recreating depth). Once the movement is fully analyzed, it is recreated in 3D to generate an animation file. Then, the animation is retargeted onto any custom avatar.

Kinetix tracks body movements at specific positions and labels them at each critical joint (e.g. feet, ankles, knees, shoulders, elbows). To get the best final output, Kinetix AI makes predictions that are accurate at the centimeter scale.

Hand movements are even more complex to detect, but nothing's impossible! At the moment, Kinetix AI can spot whether fists are clenched, hands and fingers are open, or fingers are separated (celebrating victories ✌️ for example). More complex hand movements are still hard to detect, but Kinetix keeps improving its models at large scale.

Kinetix AI can also detect and isolate half-body movements while keeping the other half static during the transposition process.

This means you can create isolated lower-body or upper-body animations while still outputting a full-body animation in the end.

Facial motion is currently not recognized by our algorithm.

Stay tuned! The feature is being developed and will arrive soon.

Kinetix provides a User-Generated Emote feature to boost your game's engagement and revenues.

Welcome to the Kinetix documentation. You will find tutorials and technical information to help you navigate our technologies and integrate our User-Generated Emote feature in your game or app.

With the User-Generated Emote feature, players can create a custom emote from any video, directly in the game, and play it on their avatar. All it takes is a phone camera or any video for Kinetix's AI to create and play an animation in-game.

Kinetix's User-Generated Emote feature is designed to be seamlessly integrated into any of the most popular game engines available on the market.

To integrate Kinetix's User-Generated Emote, you can choose from 2 options:

An all-in-one, plug-and-play solution with the Kinetix SDK

A more flexible solution with the Kinetix API

Choose the integration method that fits your needs best:

Kinetix's emotes meet very strict standards to maximize their compatibility with any game.

Kinetix has carefully benchmarked the emotes available on the market to define emote standards that match the constraints of any avatar-based game. These standards ensure an optimal player experience (for example, by avoiding collisions) and the full compatibility of any emote distributed through our technologies.

Name: Every Emote has a unique name.

Mature content: Y/N, useful metadata for moderation purposes

Emote duration: Kinetix emotes have a duration between 1 and 10 seconds

Emote Icon: All Kinetix emotes come with an associated icon for an optimal emote listing and distribution

Emote Gif: All Classic Emotes come with an associated GIF for an optimal understanding of the Emote

Emote ID: A unique ID composed of 36 characters to identify any Emote available.
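The metadata fields above can be sketched as a small data structure with the two numeric constraints the standards state (a 36-character ID and a duration between 1 and 10 seconds). This is only an illustration in Python: the class name, field names, and `validate_emote` helper are assumptions made for this example, not part of the Kinetix SDK or API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class EmoteMetadata:
    """Hypothetical container mirroring the metadata fields listed above."""
    emote_id: str         # unique ID, 36 characters
    name: str             # unique emote name
    mature_content: bool  # Y/N flag, useful for moderation
    duration_s: float     # emote duration in seconds
    icon_url: str         # associated icon (assumed to be referenced by URL)
    gif_url: str          # associated GIF (assumed to be referenced by URL)


def validate_emote(meta: EmoteMetadata) -> List[str]:
    """Return a list of problems; an empty list means the metadata looks valid."""
    problems = []
    if len(meta.emote_id) != 36:
        problems.append("emote_id must be 36 characters long")
    if not meta.name.strip():
        problems.append("name must not be empty")
    if not 1.0 <= meta.duration_s <= 10.0:
        problems.append("duration must be between 1 and 10 seconds")
    return problems
```

A check like this could run on your side before listing an emote in a store or inventory, so that malformed entries are caught early.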

We boost video games' engagement with an AI-powered User-Generated Emote technology.

Established with a passion for redefining the way players connect, express, and engage, Kinetix has embarked on a journey to enhance the gaming experience. We believe in the power of AI to serve in-game self-expression, customization, socialization and limitless creativity.

Our mission is to empower game developers to unlock the full potential of their game. We're here to provide them with the tools and resources they need to elevate player engagement, keep their games fresh and dynamic, and immerse their players in a social, customizable, and expressive gaming world.

We believe that players, too, can be creators through UGC. There is no greater tool than generative AI to empower players with fantastic creation opportunities, without requiring any technical skills. By giving players the tools to craft their emotes, Kinetix fosters a vibrant and inclusive community where player expression is celebrated.

To achieve this vision, we believe that there is no better asset than Emotes!

We developed our AI-powered User-Generated Emote technology to enable any game or virtual world to benefit from the potential of UGC. Empowering players with the possibility to craft emotes increases in-game engagement, socialization & customization, and unlocks new revenue streams.

The User-Generated Emote feature is essentially an emote creator to be embedded directly in-game, allowing players to craft custom emotes from any video.

Let your players create & play custom emotes directly in your game, using our AI-powered User-Generated Emote feature. Integrate it with just a few lines of code, and boost your game's engagement as you empower your players with unlimited expression capabilities.

Kinetix technologies include a proprietary retargeting algorithm that automatically transfers the motion of the emote to any human avatar in your game.

Our cloud-based technology is designed to enable an unlimited number of Emotes to be played simultaneously without altering the game performance.

Also, multiple players can create and/or play User-Generated Emotes at the same time, without damaging their in-game experience or your servers.

Once onboarded with our User-Generated Emote feature, you can monetize the created emotes the way you want, if you want to! You possess all rights to monetize every emote distributed via our technologies to your users.

Kinetix's User-Generated Emote feature adapts to your revenue model and unlocks various monetization opportunities based on emotes.

In-game stores: Integrate User-Generated Emote creation tokens in your in-game store. Monetize User-Generated Emotes and make them exclusive and rare items that only your top players can showcase.

Loot boxes and crates: diversify the content of your loot boxes by offering User-Generated Emote creation tokens.

Battle Pass: Fill your Battle Pass with valuable items including User-Generated Emote tokens to diversify the content of your Pass.

Kinetix's User-Generated Emote offers many ways to enhance your gaming experience and player engagement. Here are the key benefits:

Enhanced User Engagement: Emotes create memorable and shareable moments for players. By empowering them to express themselves with custom moves through their avatars, you cultivate a sense of personalization and social interaction, leading to increased player engagement and retention.

Immersive Gameplay Experience: User-Generated Emotes add a new layer of immersion to your games. They allow players to express emotions and interact with the virtual world in a more meaningful way, enhancing their overall experience and emotional connection to the game.

Cutting-Edge AI Technology: Kinetix's User-Generated Emote technology is driven by state-of-the-art AI, setting it apart from traditional emote systems. Embracing AI technologies early makes your game a pioneer!

Future-Proof Your Game: As AI continues to advance, Kinetix AI Emote technologies position your game at the forefront of innovation. Embracing AI-driven technologies lays the foundation for integrating AI-generated assets, worlds, and experiences into your gameplay, keeping your game relevant and exciting for years to come.

Effortless Integration: The Kinetix SDK is designed with simplicity in mind. Integrating our SDK into your existing game engine is seamless, saving your development team valuable time and resources. It allows you to focus on creating compelling gameplay and content while we handle the complexities of emote management.

Monetization Opportunities: UGC can open up new revenue streams for your game. With our technologies, you can offer players the chance to customize their experience and invest in their in-game identities.

Continued Support and Updates: By leveraging Kinetix's User-Generated Emote, you gain access to ongoing support and regular updates. We are dedicated to refining our technology and adding new features, ensuring that your game remains at the forefront of the industry.

Each Kinetix emote created by a player using the feature comes with a rich metadata structure for an optimal listing and perception from you and your users:

Every emote created with the feature can be played with any humanoid 3D avatar.

In case your avatars have a specific or complex morphology, our proprietary contact-aware retargeting might be applicable to avoid inter-penetration while playing any emote.


Turn your players into creators with our AI-powered User-Generated Emote feature!

This documentation page will guide you through the exciting possibilities of user-generated emotes and how to integrate them into your gameplay.

User-Generated Emote is a unique UGC feature that enables players to create a custom emote from a video, directly in the game.

The feature relies on state-of-the-art deep learning AI algorithms that turn videos into animations. Thanks to this pioneering technology, players can bring any movement from a video into their game.

Boost your game's engagement as you turn your players into creators and allow for more content generation, self-expression, and customization.

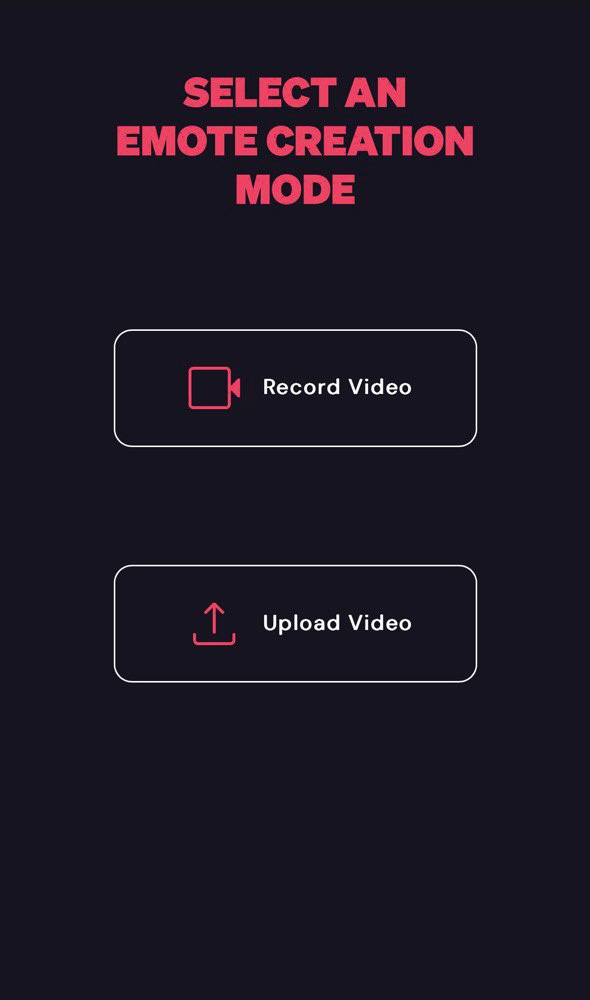

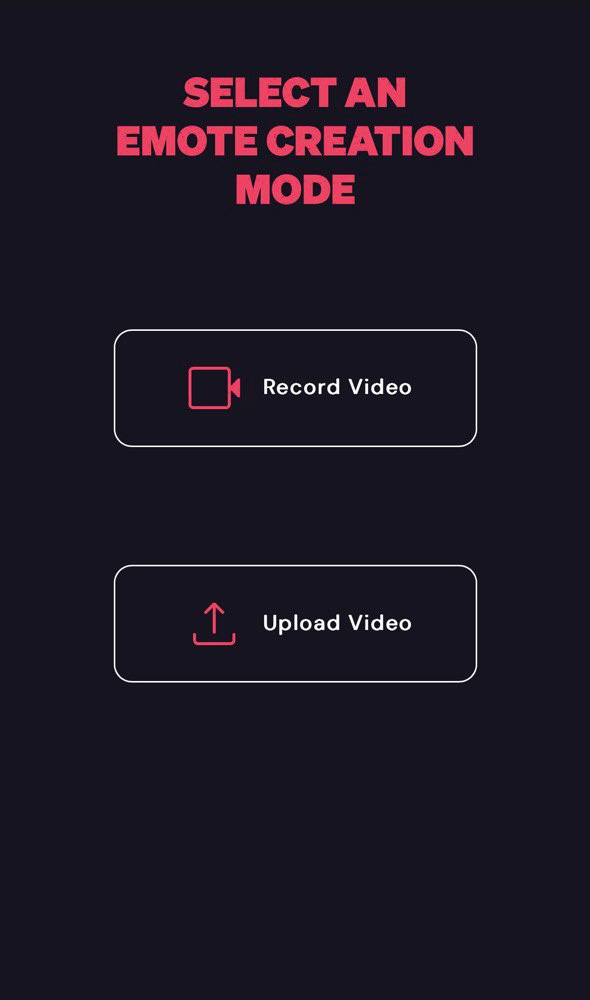

Players can upload videos from different sources:

Upload from device: it lets players pick a video stored locally on their device.

Upload from the Internet: it lets players upload videos by simply pasting a URL, allowing players to create an emote from a TikTok or Instagram video for instance.

Self-record: players can directly use their phone camera to film themselves as they perform the movement they would like to turn into an emote.

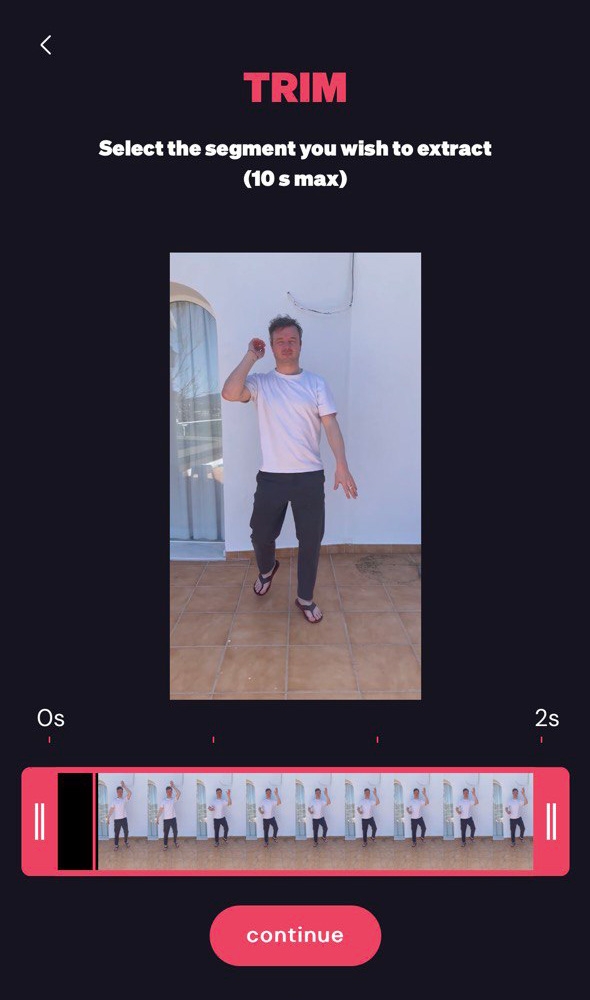

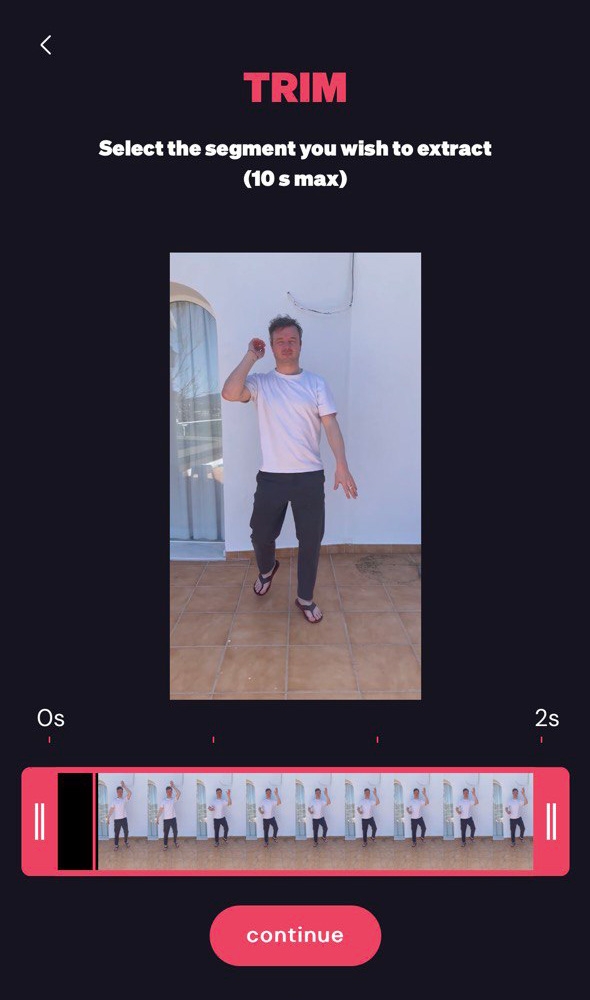

Players can easily select the exact moment containing the movement they would like to turn into an emote. Likewise, they can crop their video to remove elements that could mislead the AI.

Video cropping and trimming allow players to optimize their video without requiring any video editing skills or software.

Since its inception, Kinetix has always strived to democratize access to 3D animation creation with AI. To do so, the team has designed state-of-the-art machine learning models that are seamlessly integrated into game-oriented product bricks to make emote creation as easy as recording a video.

The overall User-Generated Emote process can be summarized in 4 main steps:

Kinetix AI will analyze the video sent by the player, identify the human that's appearing, and read their movement, in 3D (recreating depth).

Learn more about Kinetix's video-to-animation AI technology:

Kinetix designed a Moderation layer to prevent players from adding unwanted content to the game. It consists of analyzing the video sent by the player and removing any prohibited movement detected.

Kinetix has designed a robust and comprehensive moderation system to review and approve user-generated emotes while keeping you in the loop.

Once the video has been approved by moderation, the movement extraction begins. Our AI will create an animation file containing the exact same movement as the one detected in the video. The 3D animation file will be created on Kinetix's standard avatar.

Since players want to play their custom emote on their avatar, Kinetix AI needs to apply the generated animation to the player's avatar.

At Kinetix, we developed our own proprietary retargeting technology to guarantee full compatibility with any humanoid character.

Our automated retargeting not only adapts to the avatar's skeleton like any traditional retargeting method, but also considers the character's mesh, which allows our AI to adjust the generated emote based on the avatar's specific body shape.

Learn more about Kinetix's retargeting technology:

Bring Your World into the Game: Players can infuse their in-game experience with a personal touch by creating emotes from content they love and are passionate about. This direct control over in-game expressions allows players to integrate their personal interests, favorite moments, or trending content into their gaming world, making every emote a personalized statement.

Creative Freedom: Offering players the ability to craft emotes from their chosen content empowers them with unparalleled creative freedom. This not only enhances their attachment to the game but also encourages a sense of ownership and pride in their in-game representation.

Foster Community Interaction: Custom emotes become a new medium for players to communicate, share, and celebrate with each other, strengthening the game's community and social bonds.

Encourage Continuous Play: The novelty and enjoyment of creating and using personal emotes motivate players to return regularly, maintaining high levels of engagement and long-term interest in the game.

Community Co-Creation: This feature fosters a collaborative environment where players contribute to the game’s evolving culture. Shared creativity leads to a dynamic and ever-changing community, bonded over shared interests and collective content creation.

Transformative Entertainment: The fun of translating real-life movements into game-specific emotes adds a layer of entertainment that’s both engaging and immersive, keeping players invested in the game.

Viral Potential: Players are likely to share their innovative emotes on social platforms, drawing attention to the game and attracting new players who want to partake in the fun and creativity.

You can now integrate as many User-Generated Emotes as you want without any impact on your game's performance or player immersion.

Integrate User-Generated Emotes seamlessly into your gameplay experience, allowing gamers to create, own and use their custom emotes for any interactive or social activities.

Enrich your gameplay with User-Generated Emote features, and implement features such as emote sharing, liking, and commenting to encourage social interaction and engagement.

Make User-Generated Emotes an exclusive asset and monetize accordingly. We strongly recommend treating User-Generated Emotes (UGE) as the top tier in terms of Emote rarity.

Pricing for the Kinetix User-Generated Emote pipeline is per API call. We charge a fixed price every time our APIs are called, which corresponds to every emote generated by a player.

A standard pricing of €0.10 per emote generated by your players will be applied.
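As a rough illustration of the per-emote pricing above, the monthly cost can be estimated by multiplying the number of generated emotes by the standard price. The function name and the example volume below are hypothetical; only the €0.10 figure comes from this page.

```python
from decimal import Decimal

# Standard price per generated emote, as stated above.
PRICE_PER_EMOTE_EUR = Decimal("0.10")


def estimate_cost_eur(emotes_generated: int) -> Decimal:
    """Estimate the cost in euros for a given number of generated emotes."""
    if emotes_generated < 0:
        raise ValueError("emotes_generated must be non-negative")
    return PRICE_PER_EMOTE_EUR * emotes_generated


# Example: 2,500 emotes generated by players in a month.
print(estimate_cost_eur(2500))  # 250.00
```

`Decimal` is used instead of floating point so that currency amounts stay exact.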

There are 2 ways to unlock User-Generated Emote features in your game:

With the Kinetix SDK: an all-in-one solution that includes access to the feature, in addition to multiple technologies to optimize the integration and run-phase experiences (see Kinetix SDK).

With the Emote Creation API endpoints: access the emote creation feature only. You have to create your own infrastructure around the feature to support it (see Kinetix API).

User-Generated Emotes unlock a world of creativity and self-expression for games and their players, elevating the gaming experience to new heights. By embracing user-generated content, you can create an inclusive and dynamic gaming community that thrives on player participation and engagement.

Even though the feature is made for players to create custom emotes in-game, you can try the feature on the Kinetix Developer Portal.

We implemented a demo version of the User-Generated Emote feature so that you can preview what the feature will look like for your players on your avatars. This demo version is accessible online, on the Kinetix Developer Portal.

On this page you will learn how to try the User-Generated Emote feature for yourself. Players should never have access to your Developer Portal. If you want to integrate the feature in your game, head to the corresponding SDK or API integration section.

To discover how you can try the User-Generated Emote feature on your custom avatar, you can follow this video guide:

STEP 1: log into your Developer Portal account.

STEP 2: in the "Avatar Management" section, click on "upload an avatar" and check the bone mapping. If you have any questions about this step, head to the dedicated tutorial.

STEP 3: in the "Try UGC Emote" section of the Developer Portal, click on "create an animation", and then on "From a video".

When uploading a video, make sure you meet the best practices. Note that your players will have to follow the same best practices in your game.

Once your video has been sent, our AI will need a couple of minutes to process the User-Generated Emote.

STEP 4: preview your user-generated emote on your custom avatar!

If you need any information about the tool's specifications, you can learn about it .

In case an error happens, please .

Check for more info about our moderation approach.

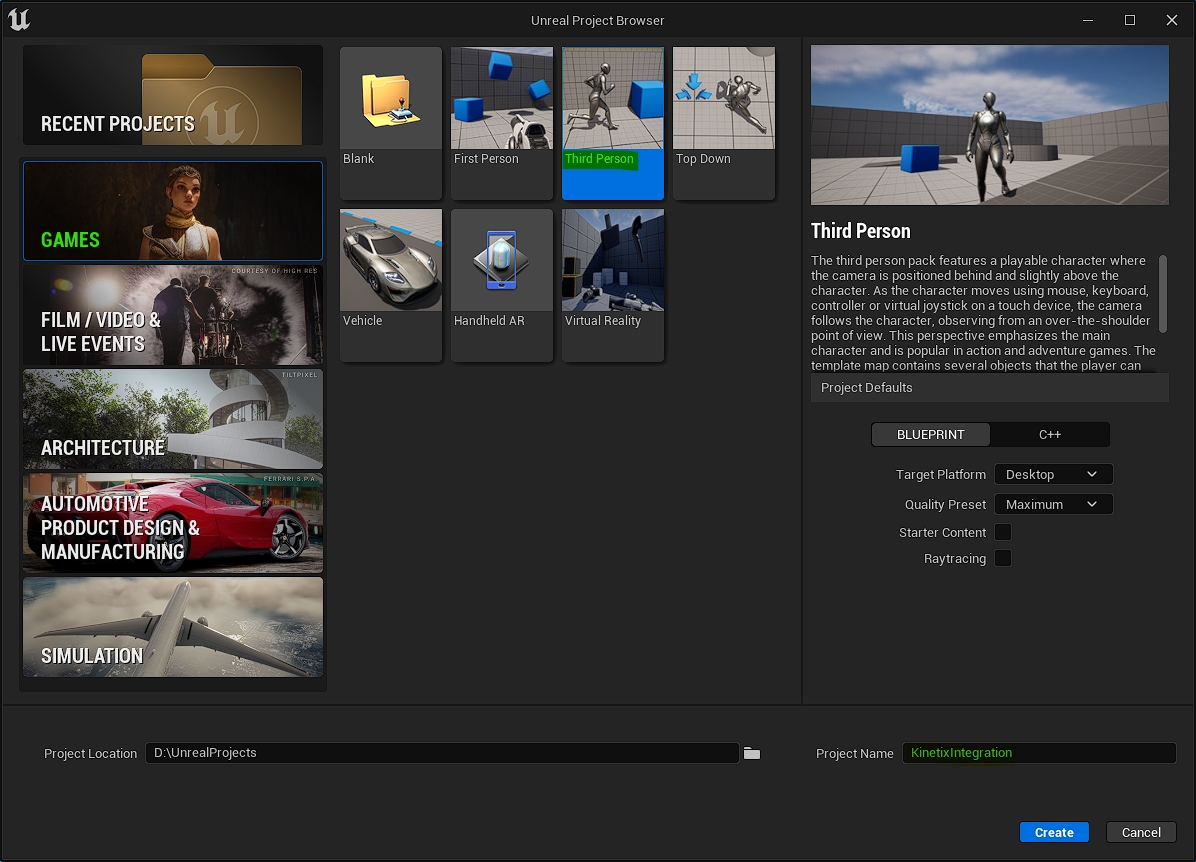

The Kinetix SDK has been carefully tested and approved in various environments.

Unity 2020.3 LTS to Unity 2023.2.20f1: PC, Mac, WebGL, Android & iOS

Unreal Engine 5.2, 5.3, 5.4: PC & Mac
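The tested Unity range above can be checked programmatically, for example in a build script. The sketch below is a hypothetical helper, not part of the SDK; it assumes Unity version strings of the form `2021.3.5f1` and treats "2020.3 LTS" as a lower bound of 2020.3.0.

```python
import re

MIN_UNITY = (2020, 3, 0)   # Unity 2020.3 LTS (patch level 0 assumed)
MAX_UNITY = (2023, 2, 20)  # Unity 2023.2.20f1


def parse_unity_version(version: str) -> tuple:
    """Parse 'major.minor[.patch]...' into a tuple of three integers."""
    match = re.match(r"^(\d+)\.(\d+)(?:\.(\d+))?", version)
    if match is None:
        raise ValueError(f"unrecognized Unity version: {version!r}")
    major, minor, patch = match.groups(default="0")
    return int(major), int(minor), int(patch)


def is_tested_unity_version(version: str) -> bool:
    """Return True if the version falls inside the tested range listed above."""
    return MIN_UNITY <= parse_unity_version(version) <= MAX_UNITY
```

A version outside this range is not necessarily incompatible; it simply falls outside the environments listed as tested.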

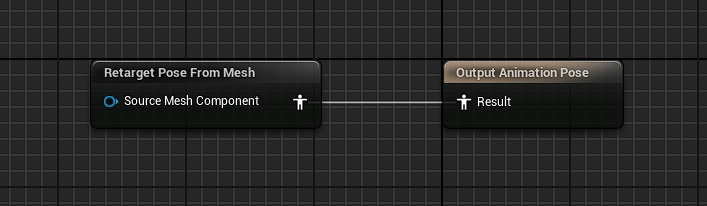

With Kinetix's proprietary retargeting algorithm, emotes created using the User-Generated Emote feature can be played on any humanoid avatar.

Emote retargeting is a powerful technique in the world of 3D animation. It allows you to transfer an animation from one avatar to another, regardless of their size, proportions, or skeletal structure. Instead of creating a unique animation for each character, emote retargeting streamlines the process by adapting a single emote to fit various avatars.

Each character in a 3D animation typically has a skeletal structure with bones and joints. All the Emotes from the Kinetix Emote Library and User-Generated Emotes are created for a specific and standard character with a standard skeleton.

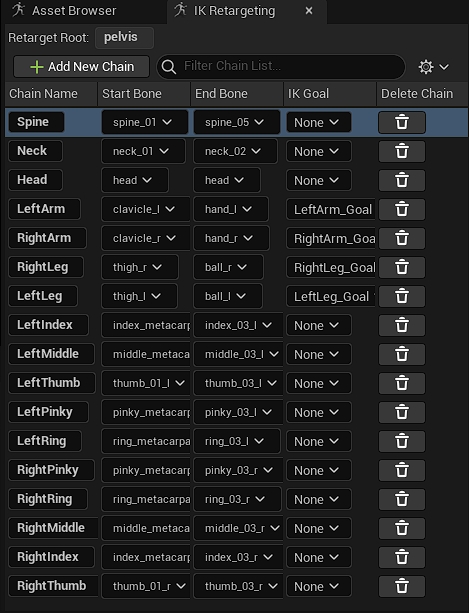

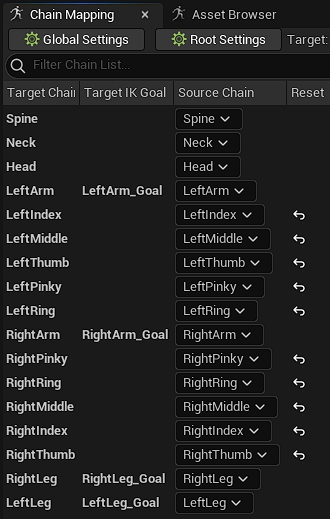

Kinetix's proprietary algorithm is able to transfer the motion from Kinetix's standard avatar to the target avatar, almost fully automatically. The algorithm first identifies the correspondence between the bones of the target skeleton and those of the source skeleton; the user then confirms the result and corrects errors if necessary. Once the Bone Mapping is done, Kinetix's Retargeting Algorithm adapts the motion to the target avatar's bone proportions to ensure that the movement stays smooth and natural.

Here is what the Kinetix Standard Skeleton looks like:

Bone Mapping is a crucial, semi-automated part of the retargeting process. It allows our AI to understand the target's skeletal structure and adapt the motion from the Kinetix Standard Avatar to the target avatar.

In a matter of seconds, our algorithm presents the results of this mapping, allowing the user to review and fine-tune the automatic bone mapping. This empowers users to correct any incorrectly assigned bones and map any unassigned bones, resulting in precise skeletal adaptation for animation, thereby saving time and ensuring animation accuracy.
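To make the review step concrete, here is a minimal sketch of a semi-automated bone mapping: an automatic pass proposes matches, and whatever it cannot match is left for the user to assign. Kinetix's actual algorithm is proprietary and not described here; this example swaps in a simple name-normalization heuristic, and the bone list, prefixes, and function names are all assumptions made for illustration.

```python
import re

# Hypothetical subset of a source skeleton's bone names (not Kinetix's actual list).
SOURCE_BONES = ["Hips", "Spine", "Head", "LeftArm", "RightArm", "LeftLeg", "RightLeg"]


def normalize(name: str) -> str:
    """Strip common rig prefixes and separators, then lowercase the bone name."""
    name = re.sub(r"^(mixamorig|bip01)[:_ ]+", "", name, flags=re.IGNORECASE)
    return re.sub(r"[^a-z0-9]", "", name.lower())


def propose_bone_mapping(target_bones, source_bones=SOURCE_BONES):
    """Return (mapping, unassigned): automatic matches and source bones left for review."""
    by_key = {normalize(bone): bone for bone in target_bones}
    mapping, unassigned = {}, []
    for source in source_bones:
        match = by_key.get(normalize(source))
        if match is None:
            unassigned.append(source)  # needs a manual assignment
        else:
            mapping[source] = match
    return mapping, unassigned


target = ["mixamorig:Hips", "mixamorig:Spine", "mixamorig:Head",
          "mixamorig:LeftArm", "mixamorig:RightArm", "left_leg", "R_Leg"]
mapping, unassigned = propose_bone_mapping(target)
# The user reviews the proposal and fixes what the heuristic missed.
for source in unassigned:
    print("needs manual mapping:", source)
mapping["RightLeg"] = "R_Leg"
```

The design point is the split between an automatic proposal and an explicit list of unassigned bones, which is what lets a user correct the mapping quickly instead of building it from scratch.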

When target avatars feature intricate designs, such as unusual bone structures, very particular bone lengths, complex morphologies, or incorporate wearables, it can potentially lead to issues like inter-penetrations that diminish the overall output quality.

To address this concern, Kinetix has devised a unique retargeting method that factors in the character's mesh, proactively anticipating and mitigating contact errors. In this process, the algorithm strategically positions 45 key points among the character's vertices, effectively capturing the character's comprehensive morphology.

After correctly positioning the key points, our algorithm takes charge of the retargeting process. As usual, it seamlessly transfers the motion from the source's joints and bones to the target's skeletal structure, but this time, the motion is optimized while adhering to the constraints set by the key points.

Here is an output sample that highlights the value of the Contact-Aware retargeting:

The Kinetix SDK is designed and optimized for any Unity-based game.

For a quick integration, you can follow an accelerated flow:

When a user uploads an avatar, Kinetix's Bone Mapping algorithm will analyze the character's skeleton and identify its bones and joints. Our AI will try to work out which bone of the uploaded skeleton matches each bone of the source skeleton, repeating this identification process for the whole skeleton.

STEP 1 - DOWNLOAD THE SDK & SET-UP UNITY

STEP 2 - CONFIGURE THE CORE PACKAGE

STEP 3 - INTEGRATE THE USER-GENERATED EMOTE FEATURE

The SDK contains a sample scene for you to test the User-Generated Emote feature. The sample scene is optional: you don't have to download it to activate the User-Generated Emote feature.

To let you create user-generated emotes in your game for testing purposes, Kinetix developed a sample scene that is an optional part of the SDK. It will guide you through the whole emote creation process so you can get started with the User-Generated Emote feature integration.

By clicking on the "Let's create an emote!" button, you will access an adapted version of the Progressive Web App (PWA) your players will use to create emotes. In the test version you have access to, you can only upload videos from your desktop (while players can also record themselves or paste a video link from the Internet).

Follow the flow, and upload a video to create an emote.

Disclaimer: the "Create" tab and the emote wheel are no longer included.

To integrate the User-Generated Emote feature, you can choose between the Kinetix SDK or the Kinetix APIs. This page will help you find out which one suits your needs best.

API & SDK: functionalities comparison

Additional work that remains once you've integrated the API or the SDK

Pre-requisites to integrate one or the other

Next steps to integrate

With the SDK, the request (for example, the video to process) will come directly from the end user.

With the API, Kinetix will receive these requests from your game server, which collects them from the users.

Having the SDK directly communicating with players' clients offers strong benefits:

Reduced Latency: Direct communication between the Kinetix servers and the players' clients can significantly reduce latency. Without a game server acting as an intermediary, data travels a shorter path, resulting in faster response times and a smoother user experience, especially crucial for real-time interactions and animations.

Lower Server Load: By bypassing the game server, you reduce its workload. This can lead to lower operating costs, as the game server has fewer data processing tasks, potentially decreasing the need for extensive infrastructure and reducing the likelihood of bottlenecks during peak usage times.

Simplified Architecture: Direct SDK integration simplifies the overall system architecture. Fewer components in the communication chain mean there are fewer points of failure, which can enhance system reliability and ease the maintenance and troubleshooting processes.

In both cases, the video processing runs on Kinetix's servers, never on your servers or the end user's computer.

By using the SDK, you also have access to real-time retargeting that can be used to play an emote that is not yet known by our system.

If you integrate the SDK, you will be able to access our pre-built mobile Progressive Web App (PWA). Players can access the PWA on their phone directly from the game by scanning a QR code. The PWA allows players to upload the videos they want to turn into emotes and send them to Kinetix. You can modify the UI/UX of the PWA as you want.

If you integrate our technology with the REST API method, you will have to build the PWA on your own.

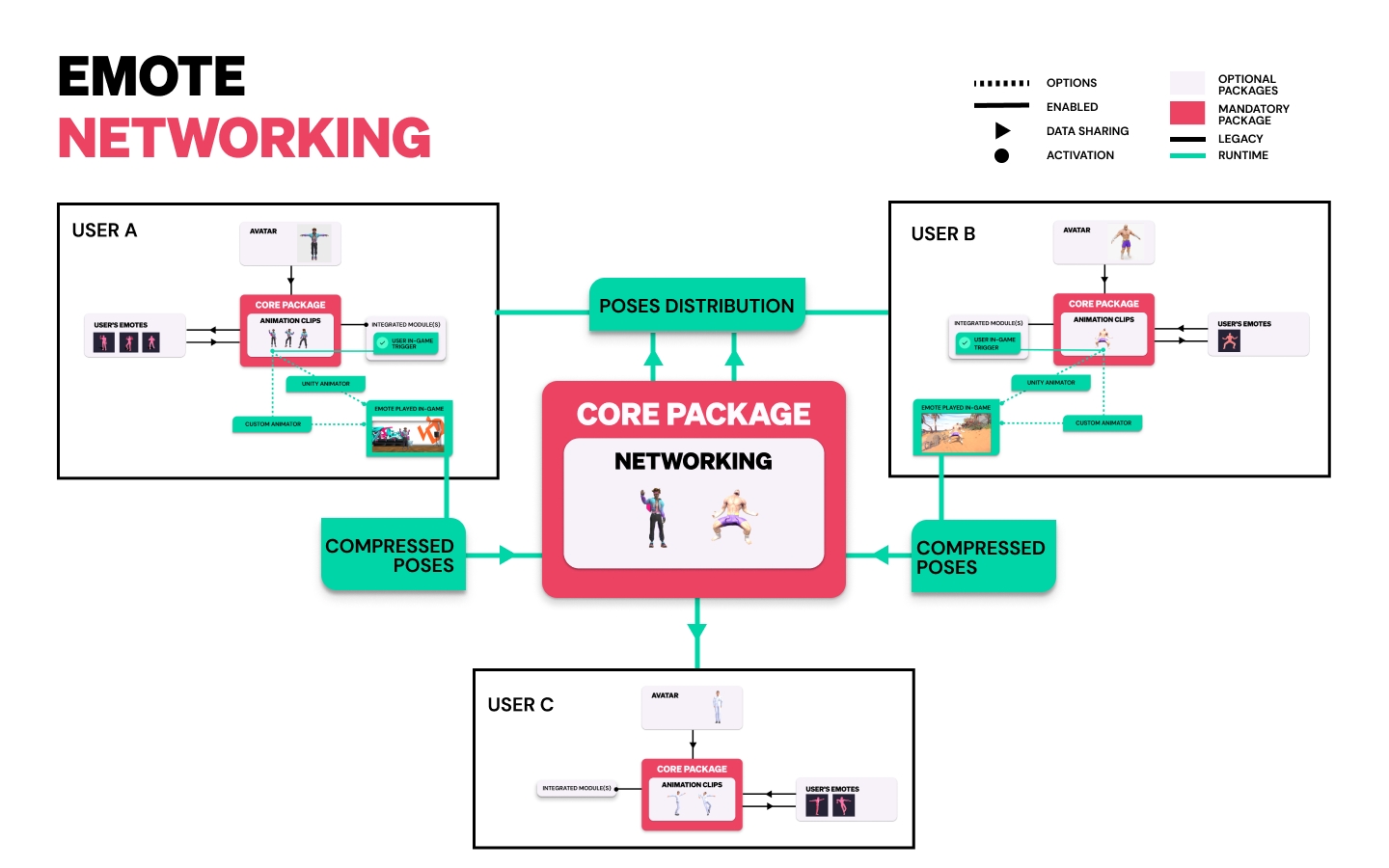

Once the video has been transformed into an emote thanks to our technology, it is vital to send that emote in real time to every other user who needs to see it. Our SDK takes care of this in an optimized flow, but you will need to reimplement it if you choose to integrate the API.

The networking system included with the SDK provides:

RAM optimizations:

Cloud storage: since players cannot store ALL the user-generated emotes played by all the players, emotes must be stored on a cloud server.

Emote streaming: emotes are streamed into the game so as not to overload players' RAM when many user-generated emotes are played simultaneously. Emote streaming consists of dividing the emote files into small chunks, so that players download emotes chunk by chunk instead of downloading multiple entire emote files. Downloading small chunks of several different emotes allows players to see multiple emotes simultaneously without any lag.
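The chunk-by-chunk idea behind emote streaming can be sketched as follows. This is a generic illustration, not the SDK's streaming format: the function names, the chunk size, and the round-robin scheduling are assumptions chosen to show how several emotes can make progress at once.

```python
from typing import Dict, Iterator, List, Tuple


def split_into_chunks(data: bytes, chunk_size: int) -> List[bytes]:
    """Divide an emote file into fixed-size chunks (the last one may be shorter)."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def interleave_downloads(
    emotes: Dict[str, bytes], chunk_size: int
) -> Iterator[Tuple[str, int, bytes]]:
    """Yield (emote_id, chunk_index, chunk) round-robin across all emotes."""
    chunked = {eid: split_into_chunks(data, chunk_size) for eid, data in emotes.items()}
    longest = max((len(chunks) for chunks in chunked.values()), default=0)
    for index in range(longest):
        for emote_id, chunks in chunked.items():
            if index < len(chunks):
                yield emote_id, index, chunks[index]


# Two emotes start playing at once: each receives its first chunk
# before either one has been downloaded in full.
for emote_id, index, chunk in interleave_downloads({"a": b"abcdef", "b": b"xy"}, 2):
    print(emote_id, index, chunk)
```

Because every emote receives its first chunk early, playback of each one can begin without waiting for any complete file.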

Server & player clients transfers optimizations:

Send only the files players need: sending EVERY generated emote file to EVERY player would result in unreasonable amounts of data transferred. The SDK only sends emote files when players need them. For instance, when a player plays a user-generated emote, only that player and the players nearby will stream-download the required emote file.

Smart caching: our SDK includes optimizations that let players keep in RAM some emotes that are likely to be played again, in order to avoid multiple transfers of the same emote file within a short span of time.
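One common way to keep "likely to be played again" emotes in memory is a least-recently-used (LRU) cache. The source does not say which eviction policy the SDK uses, so the sketch below is an assumption that only illustrates the general caching idea; the class name and capacity are hypothetical.

```python
from collections import OrderedDict


class EmoteCache:
    """Least-recently-used cache keyed by emote ID (illustrative, not the SDK's code)."""

    def __init__(self, capacity: int):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items = OrderedDict()

    def get(self, emote_id: str):
        """Return the cached emote data, or None if it must be downloaded again."""
        data = self._items.get(emote_id)
        if data is not None:
            self._items.move_to_end(emote_id)  # mark as recently used
        return data

    def put(self, emote_id: str, data: bytes) -> None:
        """Store emote data, evicting the least recently used entry if full."""
        self._items[emote_id] = data
        self._items.move_to_end(emote_id)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)
```

If you reimplement caching around the API, the capacity would need to be tuned against your target devices' memory budget.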

In-game experience optimization: emote streaming and smart caching enable players to create, play, and see multiple user-generated emotes simultaneously. Without these two building blocks combined, players would very likely experience lag and delays when a very high number of emotes are played at the same time in a local room (unless there's a way to pre-load emote files in your game).

If you choose to integrate the User-Generated Emote feature using the Kinetix API method, you will likely have to replicate some parts of the SDK's networking system, if not all of it.
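The "send only the files players need" rule from the list above can be sketched as a proximity filter: when a player plays an emote, only that player and those within some radius receive the file. How the SDK defines "nearby" is not specified here, so the Euclidean-distance test, the radius, and the function name below are assumptions.

```python
import math
from typing import Dict, List, Tuple

Position = Tuple[float, float, float]


def nearby_recipients(
    sender_id: str, positions: Dict[str, Position], radius: float
) -> List[str]:
    """Return the sorted IDs of players within `radius` of the sender, sender included."""
    origin = positions[sender_id]
    return sorted(
        player_id
        for player_id, position in positions.items()
        if math.dist(origin, position) <= radius
    )


positions = {"alice": (0.0, 0.0, 0.0), "bob": (3.0, 4.0, 0.0), "carol": (50.0, 0.0, 0.0)}
# bob is 5 units from alice and gets the emote file; carol is 50 units away and does not.
print(nearby_recipients("alice", positions, radius=10.0))  # ['alice', 'bob']
```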

Kinetix helps you take care of the moderation of User-Generated Content added to your game.

Kinetix designed two moderation layers to ensure optimal safety for your game:

AI Moderation: the videos sent by the players are analyzed with AI. Any unwanted movement will be removed when the emote file is generated.

Whether you integrate the User-Generated Emote feature with the SDK or with the API, you can access the UGC Management platform we have designed on the Developer Portal.

Only games that integrated the Kinetix SDK can access the AI Moderation for now.

The SDK does not require any additional work from you for the User-Generated Emote to work in your game: the SDK is a complete infrastructure with prebuilt solutions. You may still want to customize the UI/UX.

After you've integrated the API, you still have to handle:

The system that allows the user to upload the video to be turned into an emote (see Video Uploading Management)

The network system that allows everyone in the room to see the emote in real time (see Management of Emote Networking)

Follow the flow from these sections to integrate the SDK or API.

On this page, we will help you install the Kinetix SDK's Core Package for Unity.

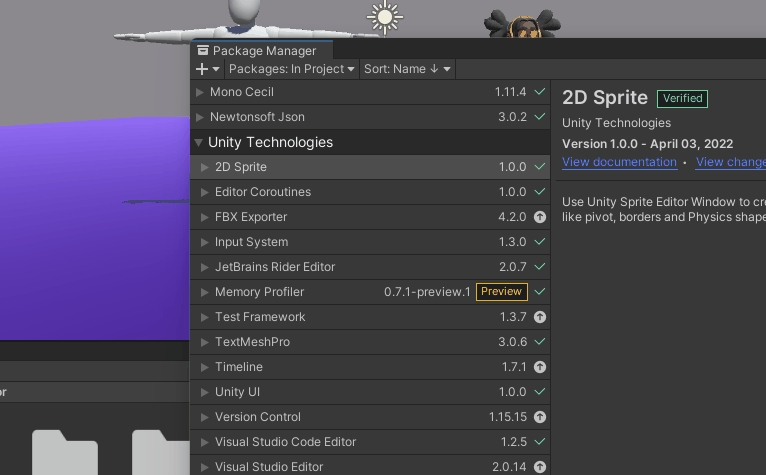

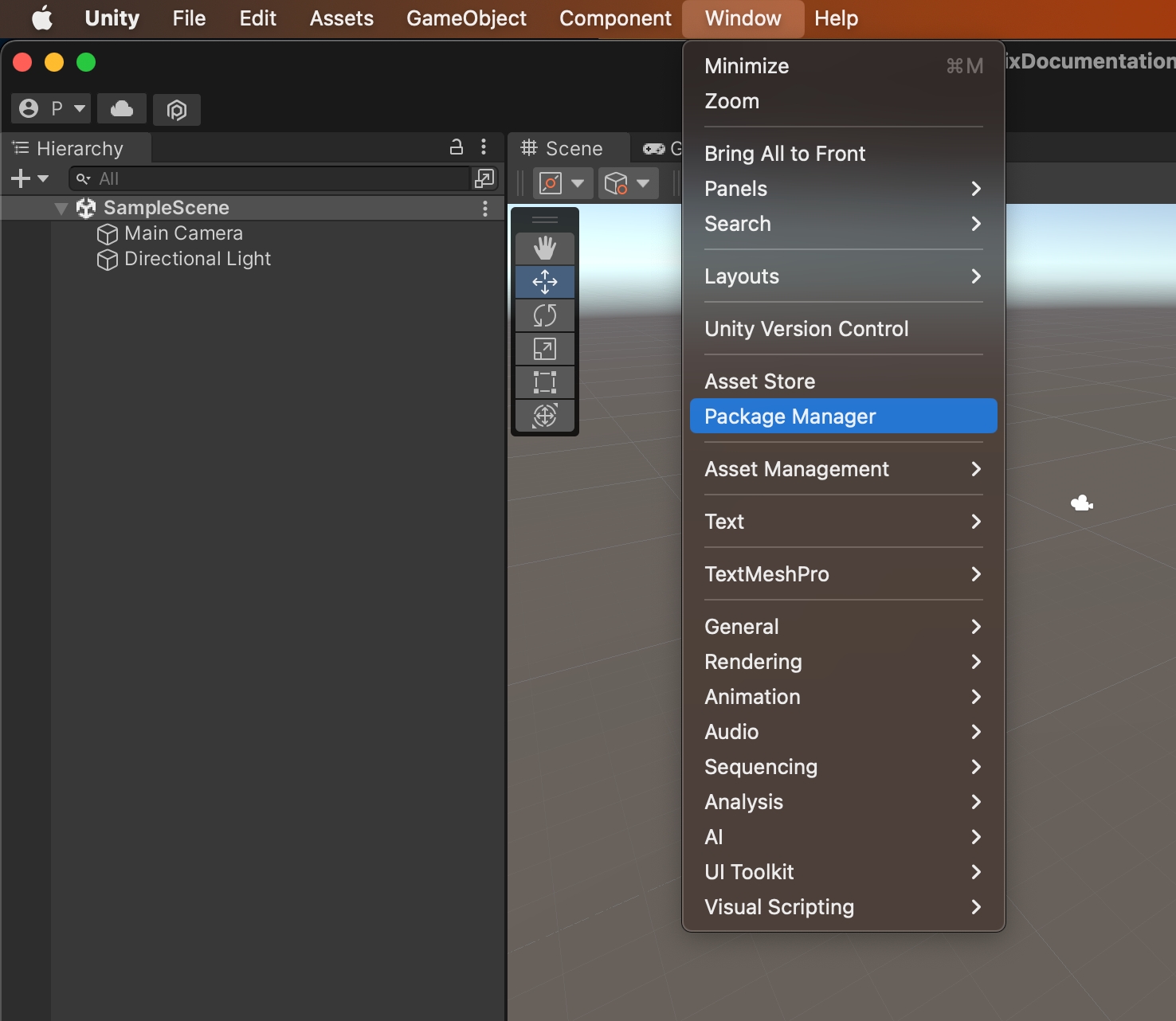

Open your Unity project and open the Unity Package Manager window via "Window > Package Manager".

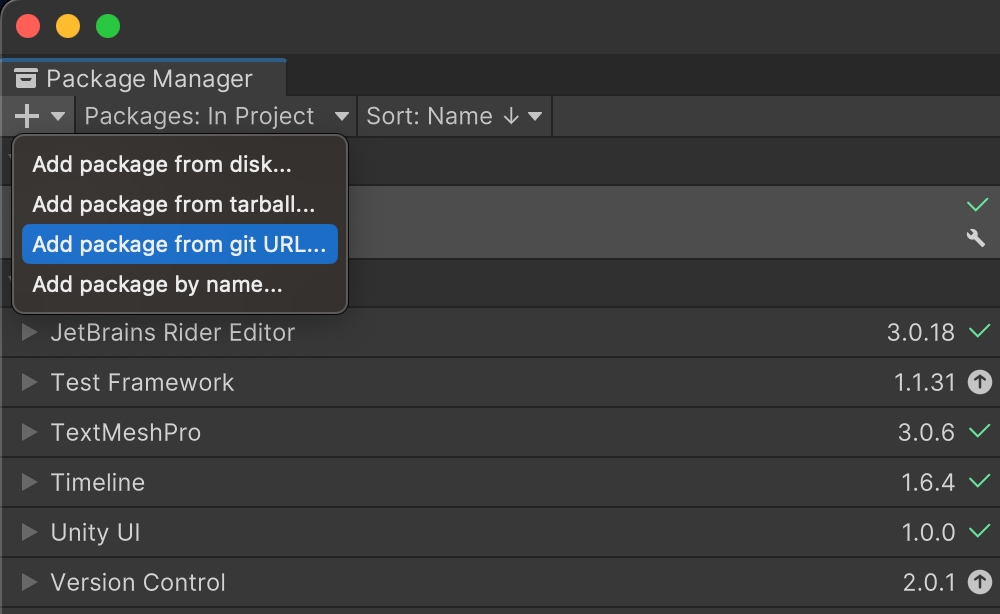

In the Package Manager window click on the " + " icon in the top left corner and select Add Package From Git URL.

Paste in this URL and click "Add":

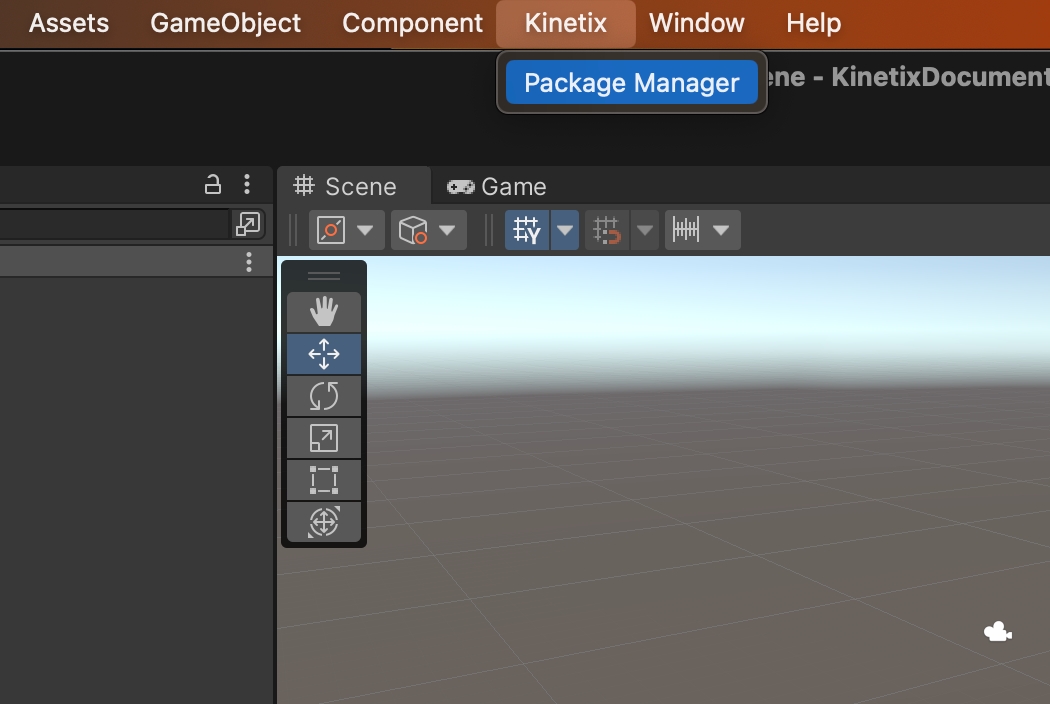

In the Kinetix window, click on Package Manager.

Click on "Install Core Bundle"

The Kinetix SDK is a plug-and-play solution to integrate our User-Generated Emote feature and all the Kinetix technologies we have developed around it.

The Kinetix SDK is a powerful cloud-based toolkit that lets you leverage our advanced User-Generated Emote technologies easily.

The SDK Integration cannot be combined with the API Integration. Please make sure that the SDK Integration is the most appropriate integration method for you by visiting the dedicated page: SDK or API: what to choose.

The Kinetix User-Generated Emote feature allows your players to create, share, and socialize with their own unique emotes whatever the game environment, adding a personalized touch to your gaming experience. Learn more about the User-Generated Emote feature.

The Kinetix SDK has been built around the User-Generated Emote feature, with simplicity in mind. The purpose of the SDK is to provide all the technologies, features, and scripts needed to seamlessly integrate the User-Generated Emote feature into any game.

The SDK's Core Package includes all the mandatory technologies and features a game needs to implement and leverage the User-Generated Emote feature to its fullest potential.

Kinetix has developed its SDK to be easily plugged into any avatar-based 3D project:

No external server stream dependency. We share data packages your netcode can interact with. No need to be always connected with Kinetix's servers (learn more: Kinanim (Emote streaming)).

It works with all animator systems. We generate animation at runtime for any avatar. You can play it by default, as we override the animator's system, or plug it into your custom system (learn more in Animation System - Unity & Animation System - UE).

The SDK matches your design. Every module's UI is provided as an example; you can customize them or create your own from scratch.

Kinetix SDK is designed to seamlessly interface with any existing game infrastructure without interfering with Client or Game tech capabilities:

Kinetix servers handle most of the effort: content creation, storage, management, and optimization.

Clients only carry the SDK - Core Package modules, essential to the SDK.

The game server only handles the relay of serialized poses.

In both cases, you will be able to have the user-generated emotes directly retargeted on your users' avatar(s). To do so, you have to upload your avatars on the Developer Portal.

Feel free to contact us if you are not sure about what networking system your game needs.

UGC Management platform: on the Kinetix Developer Portal, once the User-Generated Emote feature is integrated, you will be able to manage the emotes generated by your players.

If you need help choosing between the SDK and the API, do not hesitate to contact our technical team, who will get back to you quickly!

Before you go further, please ensure that you have installed all the required dependencies.

Animation system: to always play high-quality emotes on any humanoid avatar, with a constant level of performance.

Smart networking: to deliver a great in-game experience by dynamically managing memory and synchronizing the avatar poses.

Account management: to easily manage the way emotes are attributed to your players, and synchronize metadata.

To start leveraging the Kinetix SDK in Unity, you will need to install Git and all the required dependencies.

Dependencies are not included in the Unity package.

To import dependencies, access the Unity Package Manager (Window -> Package Manager), click the "+" button, and select Add Package from git URL.

Here are the dependencies to import (copy and paste the links):

Newtonsoft (Compatible with any version)

[com.unity.nuget.newtonsoft-json]

Input System

[com.unity.inputsystem]

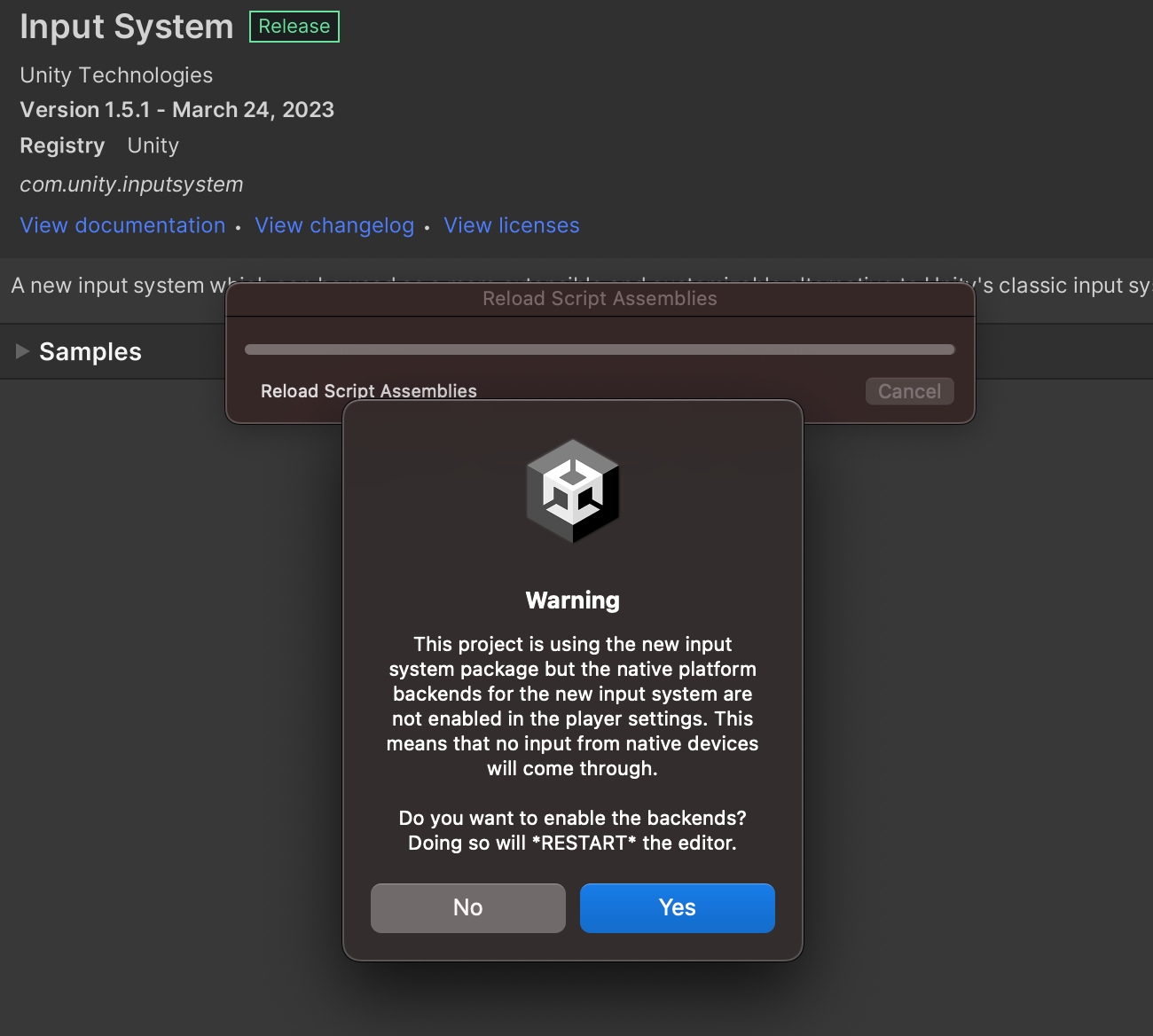

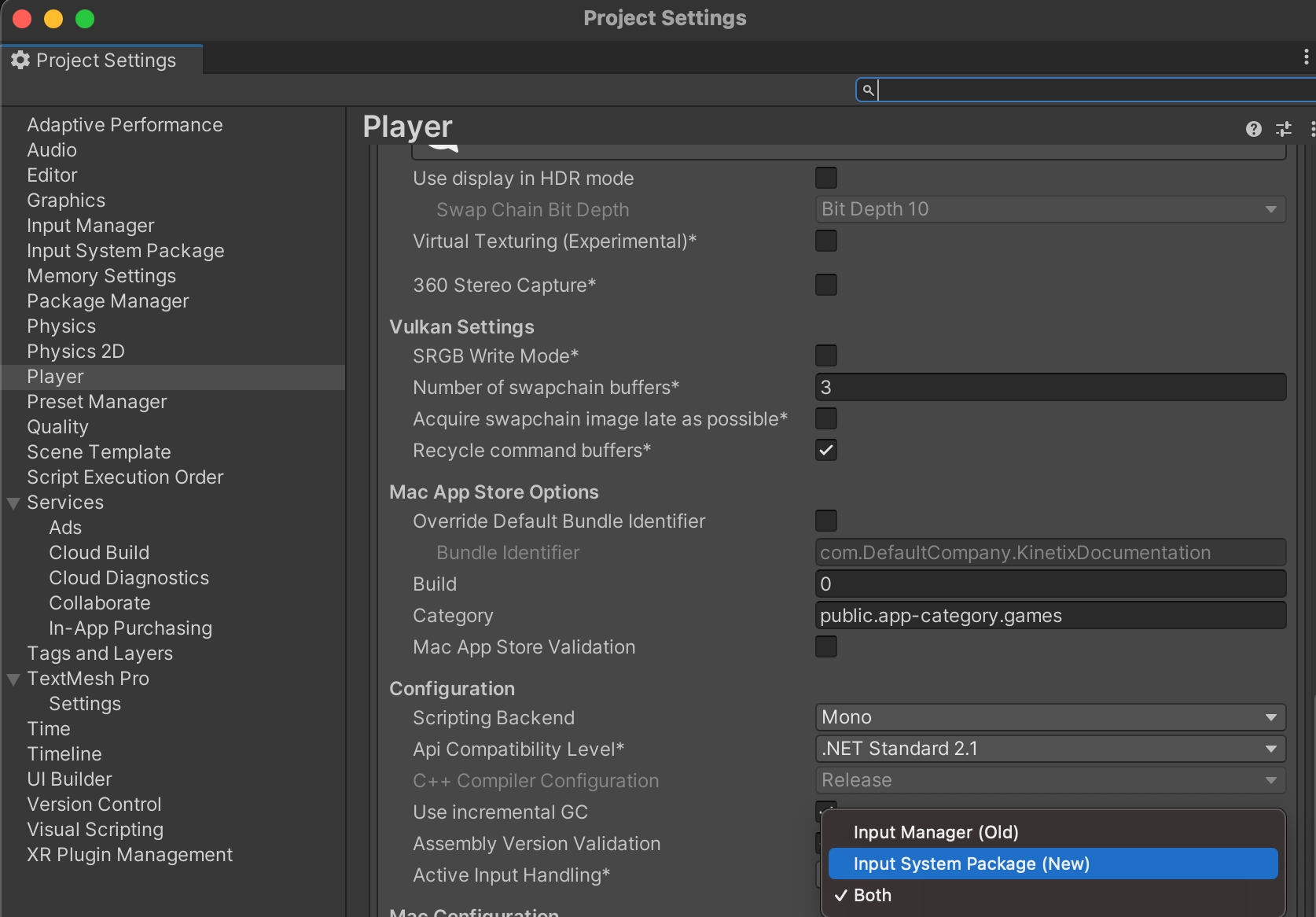

While adding the Input System package, you may see a warning popup; select Yes.

As our UI uses the new Input System, verify that your "Active Input Handling" is set to "Input System Package (New)" or "Both" in "Edit/Project Settings/Player/Other Settings/Configuration".

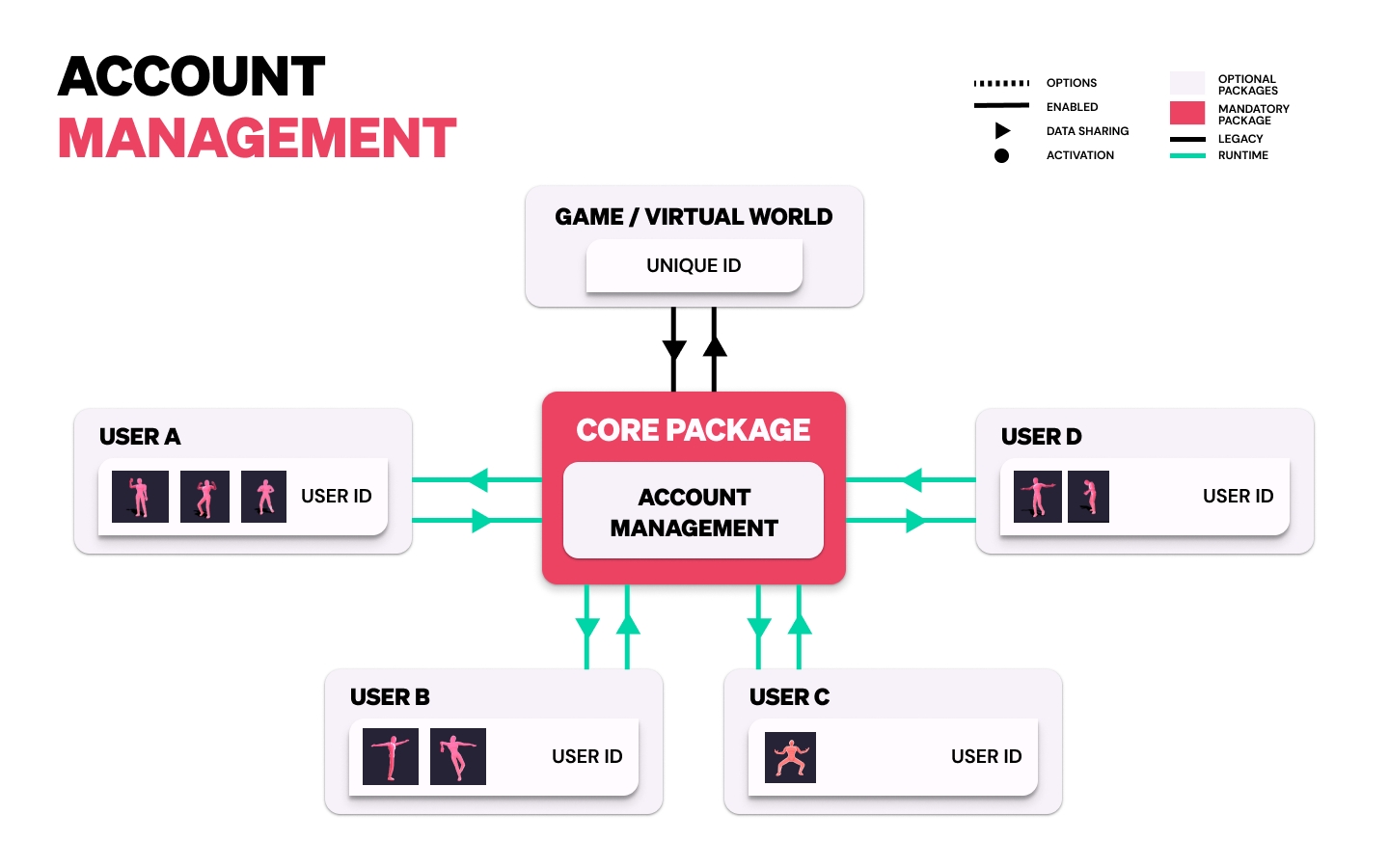

Learn how to manage your players' accounts and their Kinetix emote content.

You need to create a unique and immutable _UserId for each of your users. You can, for example, use the user ID from your distribution platform, but it can also be any unique string of your choice.

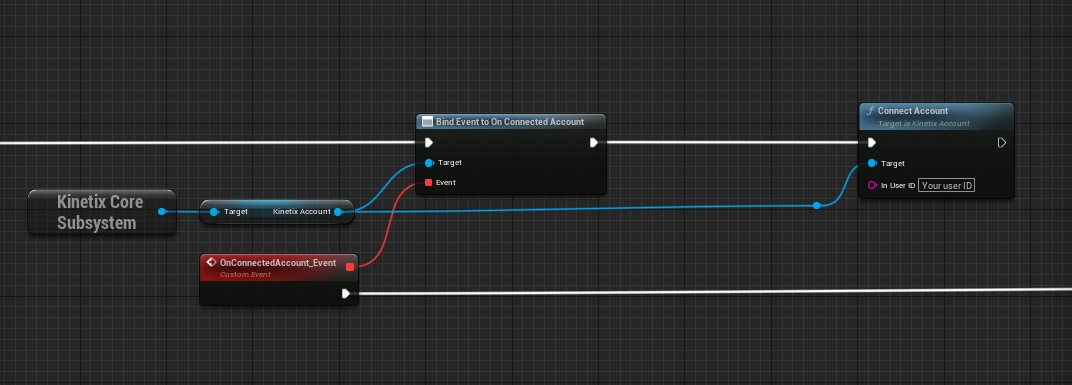

Each time a user logs in with their email or username in your game, call:

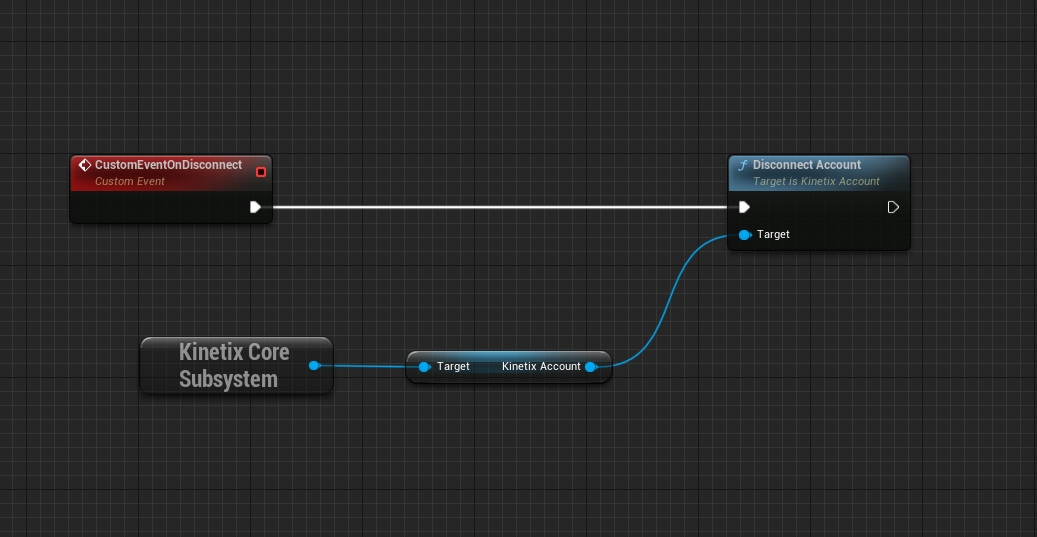

And each time a user logs out, call:

The account module of our SDK only accepts one user at a time. Any attempt to log in another user will disconnect the previous one.

You can manually retrieve the user's emotes through the API of the Core Package via this method:

Please note that the number of Kinetix emotes fetched for a single user is currently limited to 1000.

Check that your Unity version is supported.

Git needs to be installed to fetch the Unity packages through the Unity Package Manager.

Prepare the Kinetix's SDK integration in Unity.

Check out the tutorial below to get quickly started with the Kinetix SDK integration in Unity. For the Chinese version, click !

The Kinanim format is engineered to elevate gaming experiences and optimize in-game performance, through intelligent emote streaming, efficient caching, and advanced retargeting capabilities.

Kinanim is a groundbreaking file format developed by Kinetix, designed to revolutionize the way emotes are streamed in multiplayer games. It introduces a smart network-based caching mechanism that optimizes bandwidth usage, reduces server load, and enhances the overall gaming experience. With Kinanim, Kinetix can efficiently stream a large quantity of emotes, providing players with a seamless and responsive emote experience. Furthermore, Kinanim incorporates emote retargeting, making it a powerful all-in-one tool.

Network-Based Emote Streaming: Kinanim uses a network-based streaming approach to efficiently deliver emotes from Kinetix's servers to the SDK. It streams emotes in smaller, sequential portions, reducing the need for simultaneous data transmission of entire emotes.

Optimized Bandwidth: The network-based approach significantly optimizes bandwidth usage, ensuring that emote streaming is efficient and does not consume excessive data. Kinanim also alleviates server load by sending emote segments only as they are requested, resulting in a smooth emote stream even when multiple emotes are played simultaneously.

Great Responsiveness: Our system enables close to instantaneous responsiveness in emote streaming, allowing players to use emotes promptly without noticeable delays.

Kinanim operates by streaming User-Generated Emotes from Kinetix's servers to the SDK, which manages the attribution of the emote to the player's cache. When a player plays a user-generated emote, their client does not directly receive and read an animation file. Instead, it receives portions of the emote through the SDK, specific to the character (avatar) in use, transmitted via the network to their device in real time. When a player plays an emote, the surrounding players also download the played emote retargeted on the player's avatar, so that everyone sees the emote correctly.

In addition to this mechanism, the SDK caches the most recent emotes played by the player, reducing the need to stream recently used emotes again. By efficiently caching recent emotes, the SDK optimizes the player's experience and conserves both bandwidth and server resources.
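The caching behaviour described above can be pictured with a small, language-neutral sketch. It is not the SDK's implementation: the class name `RecentEmoteCache`, the `fetch` callback (standing in for streaming an emote from the servers), and the least-recently-used eviction rule are all assumptions made for illustration, since the text only states that the most recent emotes are kept.

```python
from collections import OrderedDict


class RecentEmoteCache:
    """Hypothetical cache of recently played emotes (least recently used is evicted)."""

    def __init__(self, capacity: int):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self._items = OrderedDict()  # emote_id -> emote data, oldest first

    def get(self, emote_id, fetch):
        """Return cached data, calling fetch(emote_id) only on a cache miss."""
        if emote_id in self._items:
            self._items.move_to_end(emote_id)  # mark as most recently used
            return self._items[emote_id]
        data = fetch(emote_id)  # assumption: stands in for streaming the emote
        self._items[emote_id] = data
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # drop the least recently used entry
        return data

    def cached_ids(self):
        """Return cached emote IDs, least recently used first."""
        return list(self._items)
```

Replaying an emote that is still in the cache does not call `fetch` again, which is the bandwidth saving the paragraph above describes.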

This network-based streaming minimizes bandwidth usage and server load, ensuring that the emote is displayed promptly and without interruptions.

No need to hardcode thousands of emotes in the game, which would result in very heavy game files.

All networking is handled by Kinetix (costs & know-how).

Kinanim ensures that emotes are displayed promptly and without interruptions, enabling players to interact with one another in real time.

Players can own and play thousands of user-generated emotes with a simple push of a button. They do not necessarily need to select a few emotes to embed in an Emote Wheel as in Fortnite, for example.

With Kinanim, game developers can take their multiplayer gaming experience to the next level by providing efficient and responsive network-based emote streaming with emote retargeting, enabling players to customize their emote experience without affecting the game's performance.

Initialize the Kinetix Unity SDK. This has to be done before you can call its API.

The initialization of the Kinetix SDK should only be done ONCE, when the app starts.

If you get an error saying that the Kinetix namespace can't be found, find the Kinetix Core package in the Project tab and right-click on it > Reimport.

You can initialize the SDK with parameters in the KinetixCoreConfiguration:

GameAPIKey => Game API Key, obtainable via Dev portal

PlayAutomaticallyAnimationOnAnimators => true if you want the SDK to handle the animator.

EnableAnalytics => true if you want to help us by sharing general information about how users use our SDK.

ShowLogs => true if you want to log the behaviour of the SDK for debug purposes.

EnableUGC => true if you want to enable UGC

NetworkConfiguration => Customize network configuration

CachedEmotesNb => Number of emotes cached in storage (0.5 MB / emote)
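To pick a value for CachedEmotesNb, the 0.5 MB per emote figure quoted above can be turned into a quick storage estimate. The sketch below is plain arithmetic, not SDK code, and the function names are hypothetical; actual emote sizes may differ from the quoted figure.

```python
MB_PER_CACHED_EMOTE = 0.5  # figure quoted next to CachedEmotesNb


def cache_storage_mb(cached_emotes_nb: int) -> float:
    """Estimated storage used when the cache is full, in MB."""
    if cached_emotes_nb < 0:
        raise ValueError("cached_emotes_nb must not be negative")
    return cached_emotes_nb * MB_PER_CACHED_EMOTE


def max_cached_emotes(budget_mb: float) -> int:
    """Largest CachedEmotesNb that fits within a storage budget, in MB."""
    if budget_mb < 0:
        raise ValueError("budget_mb must not be negative")
    return int(budget_mb // MB_PER_CACHED_EMOTE)
```

For example, a budget of 100 MB corresponds to 200 cached emotes under this estimate.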

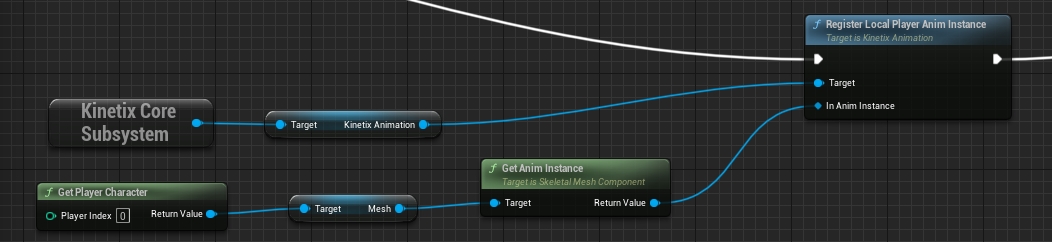

SDK Integration if you are using the official animator system from Unity.

Our SDK offers functions to work smoothly with Unity's Animation system.

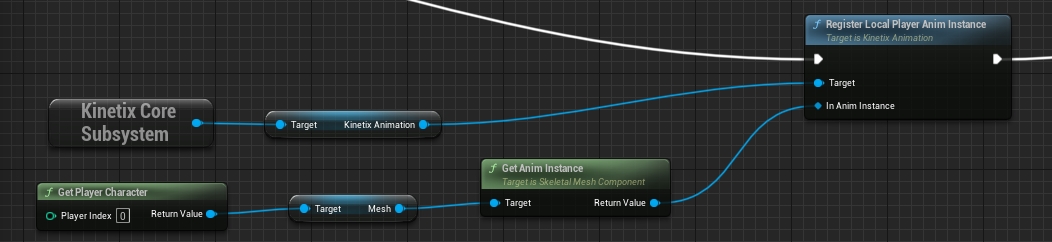

To register/unregister your local player animator:

To stop animation on the local player:

To register/unregister remote peer animators:

Then, when registering your local player, you can pass the UUID of one of your uploaded avatars. This allows the SDK to automatically use your custom retargeted emotes.

In addition to the local player or remote players, you can also register other avatars (to animate your NPCs or shop avatars, for example).

Registering the avatar returns a string representing its unique ID (UUID), to pass as a parameter to the subsequent functions.

You then load the animations with the avatar UUID:

And play the animation with:

To get the duration of an emote, you must first get a KinetixClip object. You can easily get it via the code sample:

Please note:

You can have the duration in the OnSuccess callback

The OnComplete callback ensures the full clip is available

Learn more about our Animation functions in

Learn more about our Network functions in

First, check to get the UUID of one of your uploaded avatars.

Learn how to seamlessly integrate the Kinetix SDK with your own Animation System for Unity.

To register/unregister your local player avatars:

Register callbacks to be informed when an animation is played on the local player:

To retrieve the Legacy AnimationClip and play it in your system for your local player:

To register/unregister remote peer avatars:

Register callbacks to be informed when an animation is played on a remote peer:

To retrieve the Legacy AnimationClip and play it in your system for a remote peer:

In addition to the local player or remote players, you can also register other avatars (to animate your NPCs or shop avatars for example).

Registering the avatar returns a string representing its unique ID (UUID), to pass as a parameter to the subsequent functions.

You then load the animations for the avatar UUID:

And play the animation with:

The SDK's Core Package includes all the mandatory technologies and features a game needs to implement and leverage the User-Generated Emote feature at its full potential.

When designing our SDK, we focused on developing features that save you time and allow you to easily integrate the User-Generated Emote feature within your game. Each Core Package module is flexible, so it fits any development environment.

Learn more about the SDK's 3 Core Modules in Animation system, Smart networking & Account management.

When loading a humanoid avatar, Kinetix's Core Package calls the associated user-generated emotes to handle the retargeting. The process consists of downloading the GLB file of the emotes and retargeting them with the registered avatar to generate Legacy AnimationClips that perfectly fit your avatar(s). The retargeting process is optimized to keep the frame rate of your application constant. Each time a new emote is loaded, it is added to the queue of the retargeting module, which performs N actions per frame.
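The idea of spreading retargeting work over several frames can be sketched as a queue that runs at most N actions per frame. This is an illustration of the general technique only: the class name `FrameBudgetQueue` and its methods are hypothetical, and the SDK's retargeting module is not described in enough detail here to be reproduced.

```python
from collections import deque


class FrameBudgetQueue:
    """Hypothetical work queue that runs at most N actions per frame."""

    def __init__(self, actions_per_frame: int):
        if actions_per_frame < 1:
            raise ValueError("actions_per_frame must be at least 1")
        self.actions_per_frame = actions_per_frame
        self._pending = deque()

    def enqueue(self, action):
        """Queue a zero-argument callable, e.g. one retargeting step."""
        self._pending.append(action)

    def tick(self) -> int:
        """Call once per frame; runs up to N pending actions and returns the count."""
        ran = 0
        while self._pending and ran < self.actions_per_frame:
            self._pending.popleft()()  # run the oldest pending action
            ran += 1
        return ran

    def __len__(self):
        return len(self._pending)
```

Because each frame does a bounded amount of work, loading many emotes at once lengthens the total processing time instead of lengthening any single frame.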

To avoid conflicts between the generation of humanoid AnimationClips at runtime and the Animator, we developed a proprietary Animation System composed of the "KinetixCharacterComponent", "ClipSampler" and "BlendAnimation" scripts. They are automatically added to the GameObject (Unity) / Actor (Unreal Engine) of your animator when you initialize your SDK.

That way, when a player triggers a user-generated emote in-game, our Core Package samples the pre-generated AnimationClip at runtime. It guarantees that high-quality emotes are always played with a constant level of performance. Once you have registered your animator (Unity's animator, Unreal Engine's animator or a custom animator), this process becomes seamless.

Dynamically adding and playing multiple emotes simultaneously can be a very tricky issue: in the vast majority of games, it is not possible to store thousands or millions of user-generated emotes in the player's cache or to hardcode the emote files, which leads to storing emotes in the cloud.

The concept is to cache the peer IDs by registering them via our SDK. A KinetixCharacterComponent is added to local and remote players. Using a simple implementation, you will be able to get the current pose of the local player and apply it to the remote player.

To ensure real-time communication between clients, our solution reduces the volume of messages that are shared by only serializing poses from played emotes. This technique is more than 200 times lighter than sharing emote files, and allows multiple players to play and see user-generated emotes simultaneously.

Managing and distributing the right content to the right gamer usually takes a lot of time and implies custom developments.

Kinetix's SDK includes an Account management solution to fetch user-generated emotes and their data for players while guaranteeing their data privacy. It facilitates content distribution and monetization for game studios and developers, while ensuring that players can seamlessly retrieve their user-generated emotes in their in-game inventory.

This Account management module also lets you track user-generated emotes usage metrics and optimize emotes distribution in your game accordingly (all metrics accessible from Developer Portal).

One of the many challenges developers face when integrating emotes or animations in a game is playing them on any avatar. It is even more complex when a game or 3D digital world includes different avatars, possibly with different skeletons. At Kinetix, the user-generated emotes are created on Kinetix's Standard Avatar. To play them on your custom avatar, we have developed a proprietary algorithm that transfers any 3D animation (emote) to any 3D humanoid character, and fits any animator system (Unity, Unreal Engine, and custom animator systems).

Head over to & to learn more and integrate.

Head over to to learn more. Configure the network for your game: - -

In this section, you will learn how to initialize the Kinetix SDK's Core Modules in your Unity application with our Core functionalities.

Synchronise the Kinetix emotes for your players with our server-agnostic solution.

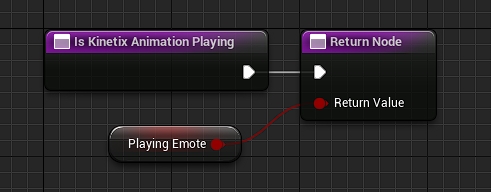

When you play an animation, the event KinetixCore.Animation.OnAnimationStartOnLocalPlayerAnimator will be fired.

It allows you to use a callback to pass the ID of the played emote to your network layer and, using an RPC or a Sync Variable, to trigger the animation on the KinetixCharacterComponentRemote (see below).

For more information about the whole strategy, you can visit Kinanim (Emote streaming) and Emote networking & caching.

Once a remote peer connects to your room, or is already connected to it, you can register them by passing their peer network ID and their animator as arguments:

Then get and store the automatically added KinetixCharacterComponent.

Once a remote peer disconnects from your room, you can unregister them by passing their peer network ID as an argument.

Once the local peer disconnects from the room, you can unregister all remote peers.

We differentiate 2 types of KinetixCharacterComponent: Local and Remote. Today the distinction is mostly semantic, as a Remote KCC has the same capabilities as the Local one, but represents a Remote Peer.

Get your Game authentication API Key from Kinetix's Developer Portal to link your game with Kinetix technologies.

There are 2 types of Keys:

Limited - Free key used for tests and pre-production purposes. You won't be charged anything using that key in a pre-prod environment. Note that with a Limited key, the number of User-Generated Emote you can create is limited, to avoid issues.

To request an authentication Game API Key on the Developer Portal, you need to have a game space created. If you do not already have a game space created, head to your dashboard, and create your game space by clicking on "Create a game".

Then, click on the "Get API key" button, below "Activate your Game/App API Key".

Copy and save your Game API Key. You will have to use it soon!

CAUTION: your Game API Key will be displayed only once. It is crucial that you copy and save it before closing the pop-up, otherwise you will have to restart the process!

Get started with the Unity SDK in under 10 minutes.

Next, we'll finalize it to provide you with a complete template for integrating UGE into your game:

Let's review the different steps of the code together!

Before initializing the SDK (which is an asynchronous process), you can register a callback to call subsequent functions of the SDK:

Then, you can initialize the SDK with the configuration you want:

Your character has to be a humanoid.

We will focus on this part of the code:

We use the ConnectAccount method, establishing a link between the user account on your end and within Kinetix's system.

The userID you give to Kinetix must be reused each time you want to connect this specific user, and it must remain exclusive to them.

While it can be a hash or any string, we highly recommend anonymizing user data and refraining from incorporating any personal information.
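One way to follow this recommendation is to derive the ID from your internal account identifier with a keyed hash, so the value stays stable for a given user while revealing nothing personal. The sketch below is only an illustration of that approach: the function name `kinetix_user_id` and the idea of a game-side secret key are assumptions, not part of the SDK.

```python
import hashlib
import hmac


def kinetix_user_id(internal_user_id: str, secret: bytes) -> str:
    """Derive a stable, anonymized ID from an internal account identifier.

    `secret` is a hypothetical key kept on the game's side; the same user and
    secret always produce the same 64-character hexadecimal string.
    """
    digest = hmac.new(secret, internal_user_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()
```

The resulting string can then be passed as the userID each time this user connects, which satisfies the requirement that it be reused and remain exclusive to them.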

Let's check the following code (inside the success callback of ConnectAccount):

As you can see, we provide a way to know when a link / token to the Web Application is invalid (either because of the security token expiration or after usage).

To get your player's emotes, you can call GetUserAnimationMetadatas, which returns an array of AnimationMetadata containing all the info you need (name, thumbnail, file links, etc.).

As you can see, you can get the ID of an emote by accessing the Ids.UUID property of an AnimationMetadata.

Once we have the id of the emote we want to play (and the local player has been registered), we can just call:

Kinetix's SDK uses API keys to authenticate Emote requests. You can view and manage your API key in the .

Register on to generate your Game API Key and start leveraging Kinetix's User-Generated Emote feature.

Unlimited - Free key used for production purposes. Access to the key is free but depending on your usage you might be charged based on our policy. To obtain an Unlimited key, head to your dashboard on the Developer Portal, and click on "Upgrade to Unlimited", in the "Monitoring" section.

If you followed the section, your script should look something like this:

More info:

To play emotes, you can use an already configured character from your game that has an animator, for example (custom animation systems are supported, please visit for more info). You can call the RegisterLocalPlayerAnimator method to easily register a character.

If you uploaded an avatar in our , you can also pass the avatarID matching the player character you registered to benefit from the Contact-Aware Retargeting.

More info:

You can then call a method to get the link to the Web Application, and attach it to a script to open the web browser (for example with )

More info:

You should be ready to use the SDK now! We encourage you to visit to expand your knowledge of the SDK or our to check what methods are available.

Our SDK allows comprehensive control over user-generated emotes with features such as trimming animations, pausing/resuming, setting elapsed time, modifying play rates, and looping animations. Note that these features are not automatically networked; you must send the relevant information over your network layer and call the appropriate methods using our "Remote implementation."

Trimming an animation lets you play only a part of the emote instead of the whole. You can remove a part from the start of the animation, from the end, or from both simultaneously.

Example:

Let's say the emote is 10 seconds long and you want to play the animation from 3 seconds to 5 seconds.

Example 2:

Let's say the emote is 10 seconds long and you want to play the animation from 3 seconds to the end of the animation.
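The two trimming examples above can be checked with a small sketch that reads their time values as seconds (an interpretation of the notation used above). The function names are hypothetical and this is not the SDK's trimming API; it only shows which window of the emote ends up being played.

```python
def trimmed_range(duration, start=0.0, end=None):
    """Return the (start, end) window in seconds that would be played.

    Leaving `end` as None means "play until the end of the animation".
    """
    if end is None:
        end = duration
    if not (0.0 <= start < end <= duration):
        raise ValueError("expected 0 <= start < end <= duration")
    return (start, end)


def played_length(duration, start=0.0, end=None):
    """Length in seconds of the trimmed window."""
    window_start, window_end = trimmed_range(duration, start, end)
    return window_end - window_start
```

With a 10-second emote, `trimmed_range(10.0, 3.0, 5.0)` keeps a 2-second window, and `trimmed_range(10.0, 3.0)` keeps the last 7 seconds.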

Pause and resume the playing of an emote, freezing the player avatar. The SDK will keep its state (elapsed time, loop mode, play rate, animation timeline, etc.).

If _Paused is true, the SDK will pause the emote.

If _Paused is false, the SDK will resume the playing of the emote.

Example:

Enables you to go to a certain time of the sampler. The elapsed time is expressed in seconds.

Example:

Modify the speed at which frames are read by the SDK. A negative number will make the SDK play the emote backward.

The SDK depends on UnityEngine.Time.timeScale.

Example:

Enable or disable loop mode for a player. When loop mode is enabled, the SDK will loop back to the start / end of the animation (depending on the playrate's sign)
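How play rate, its sign, and loop mode interact can be sketched with a single time-stepping function. This is a simplified model written for illustration, not the SDK's sampler: the function name `advance` is hypothetical, `delta_time` is assumed to be the frame time already scaled by the time scale, and clamping at the ends when loop mode is off is an assumption.

```python
def advance(elapsed, delta_time, play_rate, duration, loop):
    """Return the new elapsed time (seconds) after one frame.

    A negative play_rate moves backward. With loop enabled, time wraps around
    to the other end of the animation; otherwise it is clamped to [0, duration].
    """
    if duration <= 0:
        raise ValueError("duration must be positive")
    t = elapsed + delta_time * play_rate
    if loop:
        return t % duration  # Python's % keeps the result in [0, duration)
    return min(max(t, 0.0), duration)
```

With a 10-second emote and loop mode on, stepping forward past the end wraps to the start, while stepping backward past 0 wraps to the end, matching the dependence on the play rate's sign described above.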

Example:

The purpose of this page is to lead you through the integration of the User-Generated Emote feature using the Kinetix SDK API.

Using Kinetix Core, get the link to the Web Application that will let your players upload or record their video.

Once in the web application, gamers can either record or upload a video to create their own Emote.

Once the video is uploaded, gamers can trim the portion of the video they wish to extract to create their emote.

After trimming the video, gamers can give a name to their emote, specify its suitability for a mature audience (avoiding any trolling, sexual or hateful behaviors), and consent to the Terms & Conditions.

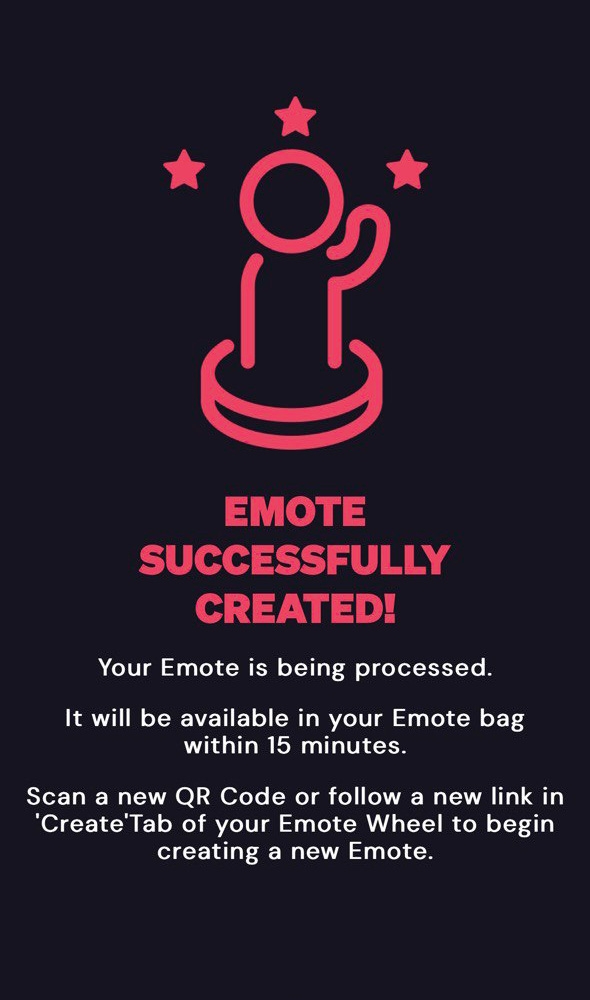

Et voilà!

After successfully submitting the Emote, gamers can return to playing the game and wait for the end of the processing of the Emote (average wait time: 5 minutes).

The emote will be available automatically when fetching the user's emotes via

If you want to let your gamers create their own Emotes, you can either use the SDK exposed methods or use our Web API endpoints:

Please make sure to read first

The goal here is to propose an implementation of the Root Motion system for controllers using a NavMeshAgent.

Please note that this feature is experimental and may require some adjustments on the implementation to work.

In this example, the player controller is organized as follows:

PlayerObject (contains the NavMeshAgent and the script VisualAvatar)

AvatarObject ("root" -> contains the Animator)

Mesh

Armature

Hips

When used, the root motion will transfer any movement of the Hips to the AvatarObject (the root).

The script VisualAvatar is responsible for updating the position of the PlayerObject from the position of the AvatarObject (see below).

Please note 3 things here:

m_visual is the transform of the AvatarObject

The position update must be in LateUpdate, as the Root Motion system operates in the Update state of Unity

In a networked environment, we want to set the position of the PlayerObject only if we are the owner of it

Remove a part of the start of the animation. Remove a part of the end of the animation. Or both simultaneously.

Pause the playing of the animation, freezing the avatar in place

Go to a specific point of the animation (in seconds)

Speed at which animation is played. Can be set to negative in order to play the animation backward

Enable or Disable the loop mode of the Kinetix-Animator

Example of Photon Fusion integration

This example only works in SHARED game mode.

Root motion, if enabled, makes the parent object (the one with the Animator component) move instead of the hips of the armature. This allows collision detection in the implementation of the character controller.

Please note that this feature is experimental and may require some adjustments on the implementation to work.

Root motion is enabled for a character via the following overloads when registering the players' animators:

And

Example of configuration:

A few options are available:

ApplyHipsYPos => Sets the root Y position to be the hips Y local position

ApplyHipsXAndZPos => Sets the root X and Z positions to be the hips X and Z position

BackToInitialPose => If set to true, the avatar will revert to its initial position when the emote has finished playing

BakeIntoPoseXZ => If set to true, the emote will be played in place for the X and Z axis, meaning the avatar won't move horizontally during the animation

BakeIntoPoseY => If set to true, the emote will be played in place for the Y axis, meaning the avatar won't move vertically during the animation

Create an Avatar mask.

Follow these steps in project view :

Right Click> Create > Kinetix > AvatarMask

In the inspector, you can click on each individual node to enable (in green) or disable (in red) the bone. Hovering the node will show the name of the bone.

For the Mask to be applied to a character, you need to call the corresponding SetMask method.

Example:

The KinetixMask can be modified at runtime using the SetEnabled method.

Example:

Example of Photon PUN integration

PhotonConnectManager is a global script attached one time to get information if the local player joined a room and then instantiate its prefab.

Player is a script attached to the player prefab. It registers and unregisters the player as either the local player or a remote player.

Reference for the Kinetix Core SDK.

This page covers a beta feature that may not be stable yet.

Our SDK allows inverse kinematics (IK) control when playing a Kinetix animation. The IK has multiple features, such as:

Hint (or "Pole Vector"): These are positions like "left knee" or "right elbow" used by the IK to orient the elbow or knee in the correct direction.

Target: These are, as their name suggests, the target position and rotation for a hand or foot.

Auto-retargeted hand and foot rotation: Our IK ensures that if you have multiple rigs in your virtual world, the IK rotation will be the same for both characters.

Adjust Hips: This feature lets you lock hands or feet into place by moving the hips.

The IK target rotation is described as follows: For the hands, the forward vector points toward the fingers (we average each finger to get a forward direction). The down vector points toward the palm of the hand.

For the feet, the forward vector points toward the toes, parallel to the ground. The down vector points toward the knee (based on the T-Pose).

Registering the OnBeforeIkEffect allows you to bind to the Update of the Kinetix Animator before any IK is computed. This allows you to check for collisions or grounding and use IK methods.

Example:

Position weight showcase :

These are positions like "left knee" or "right elbow" used by the IK to orient the elbow or knee in the correct direction.

Our IK ensures that if you have multiple rigs in your virtual world, the IK rotation will be the same for both characters. The global rotations are described as follows:

Rotation (and position) weight showcase :

This feature lets you lock hands or feet into place by moving the hips.

This example is based on

The IK target position uses the root game object you provide to convert from the global context to the avatar context.

This page is about a closed beta feature. Get in touch with us if you wish to be part of the program and enable the validation flow.

Once the validation flow is activated, created emotes must be validated before appearing in the user's "bag" (KinetixCore.Metadata.GetUserAnimationMetadatas).

Validating an emote will make it retrievable via the user's account (KinetixCore.Metadata.GetUserAnimationMetadatas).

Before validating the emote, you may want to show it to the player:

If a user is unsatisfied with their emote, you can allow them to replace it with a new emote, invalidating the previous process and restarting the flow from the beginning with the old process as a parent.

Once installed, you can follow these steps to integrate it and open the Kinetix PWA:

Grab the prefab "CanvasWebViewPrefab" from the demo scenes of the plugin

Use the following code to get the PWA URL and open it:

Detecting the end of the flow in the PWA can be done in a variety of ways, but the simplest is to poll the user's processes to detect if a new emote is being processed.

To detect a new valid Process, you can check the length of the processes after filtering them using the CanBeValidatedOrRejected property.

Although the PWA can be opened in a new browser tab (with Application.OpenURL for example), we recommend finding a way to embed it directly in your app for UX reasons. As an example, we will demonstrate using the plugin "3D WebView for Android and iOS (Web Browser)".
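The filter-and-count check described above can be sketched as follows. Processes are modelled here as plain dictionaries carrying a `CanBeValidatedOrRejected` flag; that representation and the function names are assumptions for illustration, and fetching the processes from the SDK is left out.

```python
def pending_processes(processes):
    """Keep only the processes that can still be validated or rejected."""
    return [p for p in processes if p.get("CanBeValidatedOrRejected")]


def has_new_process(previous_count, processes):
    """True when polling shows more pending processes than the last poll did."""
    return len(pending_processes(processes)) > previous_count
```

A polling loop would store the count from the previous poll, call `has_new_process` with the freshly fetched list, and treat a `True` result as the end of the flow in the PWA.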

OnUpdatedAccount

None

Called when account information is updated.

OnConnectedAccount

None

Called upon connected account.

OnDisconnectedAccount

None

Called upon disconnected account.

ConnectAccount(string _UserId, Action _OnSuccess = null, Action _OnFailure = null)

void

Connect account with UserId

DisconnectAccount()

void

Disconnect connected account

This main class is used for SDK initialization.

Added:

Improvements on Animation Load methods allowing you to get the loaded KinetixClip and know when the full emote is downloaded and ready to use

_____________________________________________________

Added:

Kinetix Mask now allows you to select parts of the avatars that you want to stop the animation on

You can now pass an avatar separately from the animator, allowing more compatibility with projects requiring a Generic avatar

Added more samples for IK and Validation flow (still in beta)

Fixed:

Loading multiple animations at the same time no longer assigns the same data to all of them

SDK now fully functions with SynchronizationContext.SetSynchronizationContext

_____________________________________________________

Added:

_____________________________________________________

Fixed:

RetakeEmoteProcess now passes the full URL for the PWA instead of just a token.

_____________________________________________________

Added:

Manual cache clearing for UGC Url

Fixed:

RootMotion not applying

_____________________________________________________

Fixed:

Animator not being enabled back after emote being played

_____________________________________________________

Added :

[Closed Beta] Integrated API routes for validation and retake

Download of emote files now scales with the user's bandwidth, allowing for better control

Fixed:

SDK now allows for blendshape editing while animation is playing

_____________________________________________________

Added :

_____________________________________________________

Added :

Custom retargeting emotes are now loaded with their own thumbnails

Removed :

Obsolete method AssociateEmotesToUser

Alias features

_____________________________________________________

Fixed :

Edge case where an emote could not be played a second time

_____________________________________________________

Fixed :

Fixed Demo scene script wrong "using" statement

Fixed assembly loading bug

_____________________________________________________

Fixed :

Demo scene is now in the samples

Fixed a wrong "using" statement

_____________________________________________________

Added :

SDK now fully supports in-house kinanim format, allowing Emote streaming.

New networking implementation, easier to use and removing most costs on the game developer's side

Removed :

Support for Emote Wheel UI

Support for Alias and Contextual features

_____________________________________________________

Added :

SDK now fully supports Contact Aware Retargeting implementation: upload your avatar in the Developer Portal.

Fixed :

Fixed an issue where a specific rig hierarchy could cause a crash

_____________________________________________________

Added :

Alias system to fetch emotes directly from portal via aliases.

_____________________________________________________

Added :

Added Multi Character management to play animations on your avatar, NPCs, or within your in-game shop

Added Smart Cache to avoid overloading the storage

Updated :

Improved Retargeting performance

Fixed :

Fixed an issue of transition through the ground on some edge cases

_____________________________________________________

Updated :

Fix and improvements on Legacy AnimationClip behaviours

_____________________________________________________

Updated :

Global Core refactoring and stabilization

Improved Ground Contact in Retargeting System

_____________________________________________________

Fixed :

It's now possible to call UnregisterLocalPlayer just after registering it.

Added a safeguard against multiple registrations of LocalPlayerCharacter and RemotePlayerCharacter.

Fixed an issue where components on the GameObject were not disposed when calling UnregisterLocalPlayer.

_____________________________________________________

Fixed :

Improved general stability on fetching emotes

Improved Gamepad Controller behaviours

_____________________________________________________

Added :

Context Module to play emotes in specific contexts

Fixed :

Misc bug fixes and performance improvements

_____________________________________________________

Added :

Create UGC Emote with Companion in the new Create tab

Assign Verified Emotes to your users

Web2 user account handling

Kinetix SDK API interaction

Hide/Show Emote Wheel tabs

Updated :

New batch of 3 test Emotes

_____________________________________________________

Updated :

Improved retargeting on high ratio avatar differences

Removed "Open" action from Input Action Map

_____________________________________________________

Added :

Kinetix Package Manager to install modules of the SDK (Core, UI Common and UI Emote Wheel)

Compatibility with the new Input System, plus a new Input Customizer that lets you customize inputs for any controller. Gamepads are now supported out of the box, in addition to touch and mouse input.

New Photon Fusion example for the network system integration

Updated :

Full rework of the network system that improves scalability and memory usage.

Update From 0.4 :

We have reworked the Network System to address scalability concerns and removed the methods that played an animation on a remote peer. You can now synchronize frame poses directly through your own network system by using our Serializer and Deserializer (see the Network Synchronization section for more information).

We have removed the MaxPersistentDataStorageInMB and MaxRamCacheInMB parameters from KinetixCoreConfiguration for flexibility. If you use our UI Emote Wheel, emotes are freed from memory by design. Otherwise, call the Unload method in our API to free the memory.

_____________________________________________________

Fixed :

UGC emotes are now centered on the avatar

Pages Label on the inventory is now refreshed

The error "'Animator' does not contain a definition for 'keepAnimatorStateOnDisable'" has been fixed.

_____________________________________________________

Added :

Root Motion System

UI Customization (Light Theme, Dark Theme and Custom Theme)

Localization System

New Web2 package

Updated :

Removed "AddFreeAnimation" method from Account class. Refer to "Import Free Emotes" section in documentation.

Updated UI Initialization directly through the class KinetixUIEmoteWheelConfiguration in the KinetixUI initialization. Refer to "UI Integration" section in documentation.

Update From 0.3.0 :

If you were using the "AddFreeAnimation" method, you can now import animations locally through our custom editor.

If you were using the ScriptableObject ConfigurationUI, you can pass your initialization information as a parameter of the KinetixUI.Initialize method.

_____________________________________________________

Added :

MaxPersistentDataStorageInMB added in KinetixCoreConfiguration

MaxRamCacheInMB added in KinetixCoreConfiguration

Updated :

Improved Foot Correction Retargeting

Improved Memory Management

Reduced Emote Loading duration on remote peers

No more specific version of Newtonsoft required

IK layer is now in open beta, see

FrameController - introducing new features: Pause/Resume, Loop, Go To Time, Adjustable Playrate / Play backward, Trim animation (Play subpart of emote). See

| Event | Parameters | Description |
| --- | --- | --- |
| `OnRegisteredLocalPlayer` | None | Called when the local player is registered |
| `OnPlayedAnimationLocalPlayer` | `AnimationIds` | Called when an animation is played on the local player |
| `OnPlayedAnimationQueueLocalPlayer` | `AnimationIds[]` | Called when an animation queue is played on the local player |
| `OnAnimationStartOnLocalPlayerAnimator` | `AnimationIds` | Called when an animation starts on the animator |
| `OnAnimationEndOnLocalPlayerAnimator` | `AnimationIds` | Called when an animation ends on the animator |

| Method | Returns | Description |
| --- | --- | --- |
| `RegisterLocalPlayerAnimator(Animator _Animator)` | `void` | Register Local Player Animator |
| `RegisterLocalPlayerAnimator(Animator _Animator, RootMotionConfig _Config)` | `void` | Register Local Player Animator with a Root Motion configuration |
| `RegisterLocalPlayerCustom(DataBoneTransform _Root, Transform _RootTransform, IPoseInterpreter _PoseInterpreter)` | `void` | Advanced registration of Local Player for custom behaviour |
| `RegisterLocalPlayerCustom(DataBoneTransform _Root, Transform _RootTransform, IPoseInterpreter _PoseInterpreter, RootMotionConfig _Config)` | `void` | Advanced registration of Local Player for custom behaviour with a Root Motion configuration |
| `RegisterLocalPlayerCustom(Avatar _Avatar, Transform _RootTransform, ExportType _ExportType)` | `void` | Advanced registration of Local Player with an Avatar Component |
| `RegisterAvatarAnimator(Animator _Animator)` | `string` | Register an avatar for non local player. Returns the ID of the avatar |
| `RegisterAvatarAnimator(Animator _Animator, RootMotionConfig _Config)` | `string` | Register an avatar for non local player with Root Motion configuration. Returns the ID of the avatar |
| `RegisterAvatarCustom(DataBoneTransform _Root, Transform _RootTransform, IPoseInterpreter _PoseInterpreter)` | `string` | Advanced registration of non Local Player for custom behaviour. Returns the ID of the avatar |
| `RegisterAvatarCustom(DataBoneTransform _Root, Transform _RootTransform, IPoseInterpreter _PoseInterpreter, RootMotionConfig _Config)` | `string` | Advanced registration of non Local Player for custom behaviour with a Root Motion configuration. Returns the ID of the avatar |
| `RegisterAvatarCustom(Avatar _Avatar, Transform _RootTransform, ExportType _ExportType)` | `string` | Advanced registration of non Local Player with an Avatar Component. Returns the ID of the avatar |
| `UnregisterLocalPlayer()` | `void` | Unregister the Local Player |
| `UnregisterAvatar(string _PlayerUUID)` | `void` | Unregister a given NPC / Avatar |

| Method | Returns | Description |
| --- | --- | --- |
| `PlayAnimationOnLocalPlayer(AnimationIds _AnimationIds)` | `void` | Play animation on Local Player |
| `PlayAnimationOnLocalPlayer(string _EmoteID)` | `void` | Play animation on Local Player |
| `PlayAnimationOnAvatar(string _PlayerUUID, AnimationIds _AnimationIds)` | `void` | Play animation on Avatar |
| `PlayAnimationOnAvatar(string _PlayerUUID, string _EmoteID)` | `void` | Play animation on Avatar |
| `PlayAnimationQueueOnLocalPlayer(AnimationIds[] _AnimationIds, bool _Loop)` | `void` | Play animation queue on Local Player |
| `PlayAnimationQueueOnLocalPlayer(string[] _EmoteIDs, bool _Loop)` | `void` | Play animation queue on Local Player |
| `GetRetargetedKinetixClipForLocalPlayer(AnimationIds _AnimationIds, Action<KinetixClip> _OnSuccess, Action _OnFailure)` | `void` | Get Retargeted KinetixClip for local player |
| `GetRetargetedKinetixClipForLocalPlayer(string _EmoteID, Action<KinetixClip> _OnSuccess, Action _OnFailure)` | `void` | Get Retargeted KinetixClip for local player |
| `GetRetargetedAnimationClipLegacyForLocalPlayer(AnimationIds _AnimationIds, Action<AnimationClip> _OnSuccess, Action _OnFailure)` | `void` | Get Retargeted AnimationClip Legacy for local player |
| `GetRetargetedAnimationClipLegacyForLocalPlayer(string _EmoteID, Action<AnimationClip> _OnSuccess, Action _OnFailure)` | `void` | Get Retargeted AnimationClip Legacy for local player |
| `StopAnimationOnLocalPlayer()` | `void` | Stop animation on local player |

| Method | Returns | Description |
| --- | --- | --- |
| `LoadLocalPlayerAnimation(AnimationIds _AnimationIds, string _LockID, Action _OnSuccess)` | `void` | Load a local player animation |
| `LoadLocalPlayerAnimation(string _EmoteID, string _LockID, Action _OnSuccess)` | `void` | Load a local player animation |
| `LoadLocalPlayerAnimations(AnimationIds[] _AnimationIds, string _LockID, Action _OnSuccess)` | `void` | Load local player animations |
| `LoadLocalPlayerAnimations(string[] _EmoteIDs, string _LockID, Action _OnSuccess)` | `void` | Load local player animations |
| `LoadAvatarAnimation(string _PlayerUUID, AnimationIds _AnimationIds, string _LockID, Action _OnSuccess)` | `void` | Load an avatar animation |
| `LoadAvatarAnimation(string _PlayerUUID, string _EmoteID, string _LockID, Action _OnSuccess)` | `void` | Load an avatar animation |
| `LoadAvatarAnimations(string _PlayerUUID, AnimationIds[] _AnimationIds, string _LockID, Action _OnSuccess)` | `void` | Load avatar animations |
| `LoadAvatarAnimations(string _PlayerUUID, string[] _EmoteIDs, string _LockID, Action _OnSuccess)` | `void` | Load avatar animations |
| `UnloadLocalPlayerAnimation(AnimationIds _AnimationIds, string _LockID)` | `void` | Unload a local player animation |
| `UnloadLocalPlayerAnimation(string _EmoteID, string _LockID)` | `void` | Unload a local player animation |
| `UnloadLocalPlayerAnimations(AnimationIds[] _AnimationIds, string _LockID, Action _OnSuccess)` | `void` | Unload local player animations |
| `UnloadLocalPlayerAnimations(string[] _EmoteID, string _LockID, Action _OnSuccess)` | `void` | Unload local player animations |
| `UnloadAvatarAnimations(string _PlayerUUID, AnimationIds[] _AnimationIds, string _LockID, Action _OnSuccess)` | `void` | Unload avatar animations |
| `UnloadAvatarAnimations(string _PlayerUUID, string[] _EmoteIDs, string _LockID, Action _OnSuccess)` | `void` | Unload avatar animations |
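
The load, play and unload calls listed above can be combined as in the following minimal sketch. `IEmotePlayback` is a hypothetical stand-in that only mirrors three of the listed signatures so the snippet compiles on its own; it is not the SDK's actual entry point, and `EmoteButton` and the `"EmoteButton"` lock ID are illustrative names. The sketch also assumes that an emote should be unloaded with the same `_LockID` it was loaded with, which the signatures suggest but this reference does not state.

```csharp
using System;

// Hypothetical stand-in mirroring three signatures listed in this reference.
// In a real project these calls would go to the Kinetix SDK instead.
public interface IEmotePlayback
{
    void LoadLocalPlayerAnimation(string _EmoteID, string _LockID, Action _OnSuccess);
    void PlayAnimationOnLocalPlayer(string _EmoteID);
    void UnloadLocalPlayerAnimation(string _EmoteID, string _LockID);
}

public sealed class EmoteButton
{
    // Assumption: any stable string identifying who keeps the emote loaded.
    private const string LockId = "EmoteButton";

    private readonly IEmotePlayback api;
    private readonly string emoteId;

    public EmoteButton(IEmotePlayback api, string emoteId)
    {
        this.api = api;
        this.emoteId = emoteId;
    }

    // Load the emote, then play it from the success callback.
    public void Press()
    {
        api.LoadLocalPlayerAnimation(
            emoteId,
            LockId,
            () => api.PlayAnimationOnLocalPlayer(emoteId));
    }

    // Release the emote using the same lock ID that was used to load it.
    public void Release()
    {
        api.UnloadLocalPlayerAnimation(emoteId, LockId);
    }
}
```

Playing from the `_OnSuccess` callback avoids requesting playback before the emote has finished loading.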

| Method | Returns | Description |
| --- | --- | --- |
| `IsAnimationAvailableOnLocalPlayer(AnimationIds _AnimationIds)` | `bool` | Check whether the animation is ready to be played on the Local Player. Returns true if loaded |
| `IsAnimationAvailableOnLocalPlayer(string _EmoteID)` | `bool` | Check whether the animation is ready to be played on the Local Player. Returns true if loaded |
| `GetNotifiedOnAnimationReadyOnLocalPlayer(AnimationIds _AnimationIds, Action _OnSuccess)` | `void` | Get notified when an animation is ready on local player |
| `GetNotifiedOnAnimationReadyOnLocalPlayer(string _EmoteID, Action _OnSuccess)` | `void` | Get notified when an animation is ready on local player |
| `GetLocalKCC()` | `KinetixCharacterComponentLocal` | Get the Local Kinetix Character Component. Returns the Local Kinetix Character Component |
| `GetPlayerList()` | `List<string>` | Get the list of IDs of all registered avatars. Returns the list of IDs of registered avatars |
| `IsLocalPlayedRegistered()` | `bool` | Check if the local player is registered. Returns true if the local player is registered |
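
As a rough illustration of how the availability check and the ready notification listed above might be used together, the sketch below plays an emote immediately when it is already available and otherwise waits for the notification. `IEmoteAvailability` is a hypothetical stand-in that mirrors the listed signatures so the snippet compiles on its own; `EmoteHelpers` and `PlayWhenReady` are illustrative names, not part of the SDK.

```csharp
using System;

// Hypothetical stand-in mirroring signatures listed in this reference.
public interface IEmoteAvailability
{
    bool IsAnimationAvailableOnLocalPlayer(string _EmoteID);
    void GetNotifiedOnAnimationReadyOnLocalPlayer(string _EmoteID, Action _OnSuccess);
    void PlayAnimationOnLocalPlayer(string _EmoteID);
}

public static class EmoteHelpers
{
    // Play right away if the emote is already loaded;
    // otherwise play once the SDK reports that it is ready.
    public static void PlayWhenReady(IEmoteAvailability api, string emoteId)
    {
        if (api.IsAnimationAvailableOnLocalPlayer(emoteId))
        {
            api.PlayAnimationOnLocalPlayer(emoteId);
            return;
        }

        api.GetNotifiedOnAnimationReadyOnLocalPlayer(
            emoteId,
            () => api.PlayAnimationOnLocalPlayer(emoteId));
    }
}
```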

| Method | Returns | Description |
| --- | --- | --- |
| `SetConfiguration(KinetixNetworkConfiguration _Configuration)` | `void` | Set the Kinetix Network Configuration |
| `GetConfiguration()` | `KinetixNetworkConfiguration` | Get the Kinetix Network Configuration |
| `GetRemoteKCC(string _RemotePeerUUID)` | `KinetixCharacterComponentRemote` | Get the Kinetix Character Component Remote based on the remote peer UUID |
| `RegisterRemotePeerAnimator(string _RemotePeerUUID, Animator _Animator)` | `void` | Register remote peer animator based on remote peer UUID |
| `RegisterRemotePeerCustom(string _RemotePeerUUID, DataBoneTransform _Root, Transform _RootTransform, IPoseInterpreter _PoseInterpreter)` | `void` | Advanced registration of remote peer avatar based on remote peer UUID |
| `UnregisterRemotePeer(string _RemotePeerUUID)` | `void` | Unregister remote peer based on peer UUID |
| `UnregisterAllRemotePeers()` | `void` | Unregister all the remote peers |

GetAnimationMetadataByAnimationIds(

AnimationIds _AnimationIds,